Why Transformers Power Modern Large Language Models: The Core Concepts You Need

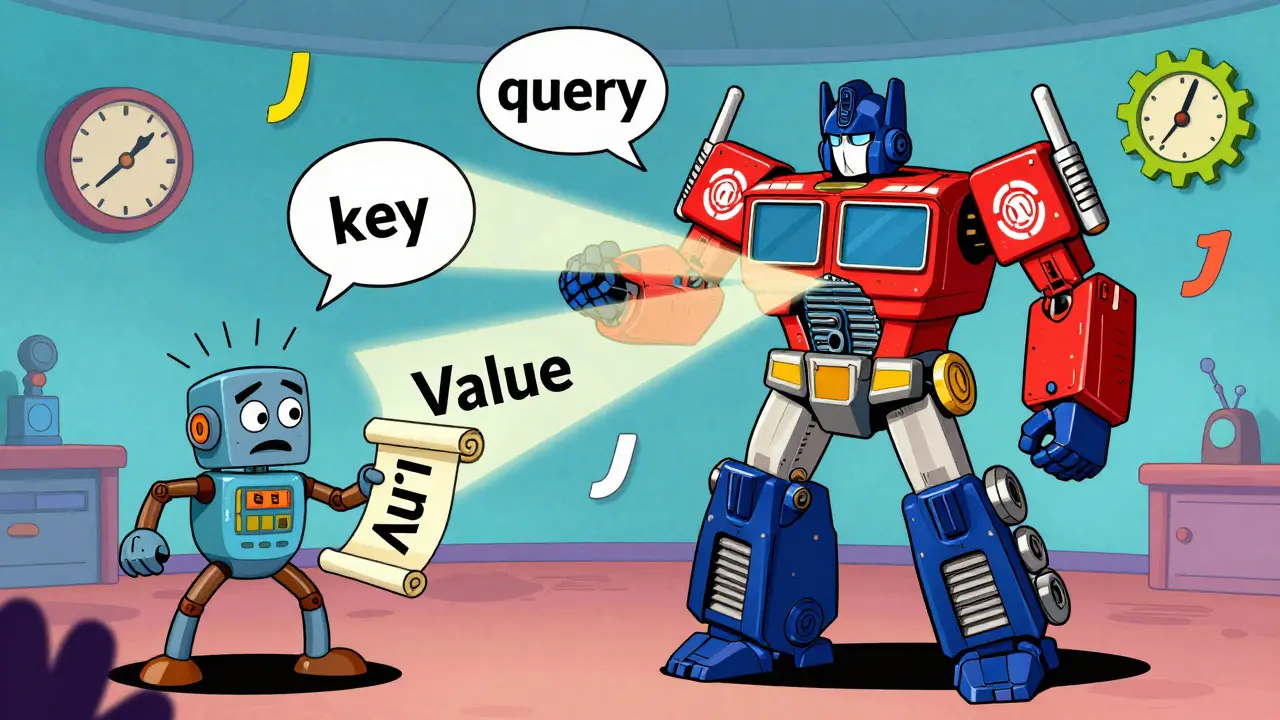

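Transformers revolutionized AI by letting language models relate every token in a passage to every other token in parallel, rather than reading text one step at a time. Learn how self-attention, positional encoding, and multi-head attention power today's top LLMs, and why transformers have largely replaced older sequential models such as RNNs and LSTMs.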