Before Transformers, language models struggled with long texts. If you gave an RNN or LSTM a 500-word paragraph, it would forget the beginning by the end. The model couldn’t connect ideas across sentences. It was like reading a book while constantly forgetting the last page. That changed in 2017, when a Google team published a paper titled ‘Attention is All You Need’. They didn’t tweak an old model. They built something completely new. And it changed everything.

What Makes Transformers Different?

Traditional models processed words one at a time, left to right. That meant they were slow and couldn’t take advantage of modern hardware. GPUs are built to do thousands of calculations at once. RNNs couldn’t use that power. Transformers do. They look at the whole sentence at once. That’s not just faster-it’s more accurate.

The secret? Self-attention. This isn’t just a fancy term. It’s the reason your phone’s voice assistant understands when you say, “I liked the movie, but the ending was terrible.” The model doesn’t just see “ending” and “terrible.” It knows those words relate to “movie,” even if they’re separated by 15 other words. Self-attention assigns a weight to every word in relation to every other word. It asks: “How much does each word matter right now?”

Here’s how it works in simple terms. Each word gets three vectors: Query, Key, and Value. The model compares the Query of one word to the Keys of all others. The higher the match, the more attention that word gets. Then it uses the Values to build a new representation. This happens in parallel for every word. No waiting. No chaining. Just fast, simultaneous understanding.

The Building Blocks: Encoder and Decoder

Transformers come in two main parts: encoder and decoder. The encoder takes your input-say, a question-and turns it into a rich, contextual understanding. The decoder uses that understanding to generate a response, word by word.

Each part has layers. The original Transformer had six. Each layer does two things: attention and feed-forward. The attention layer figures out which words matter most. The feed-forward layer processes that information deeper. Between each step, there are shortcuts called residual connections. They help gradients flow during training, preventing the model from getting stuck. Layer normalization keeps numbers stable so the model doesn’t explode or vanish.

Here’s what that looks like in real models. GPT-2, released in 2019, had 12 layers, 768-dimensional embeddings, and 12 attention heads. That means it looked at each word from 12 different angles. One head might focus on grammar. Another on names. Another on emotion. Together, they build a full picture. GPT-4 and Llama 3 use hundreds of these heads and dozens of layers. The scale is massive, but the structure is the same.

How Do Transformers Know Word Order?

Transformers don’t process words in sequence. So how do they know “dog bites man” isn’t the same as “man bites dog”?

They use positional encodings. These are fixed mathematical patterns added to each word’s embedding. Think of them like timestamps on a video. They don’t change the meaning of the word. They just tell the model where it sits in the sentence. The original paper used sine and cosine waves of different frequencies. Later models switched to learned positions, but the idea stayed the same: location matters.

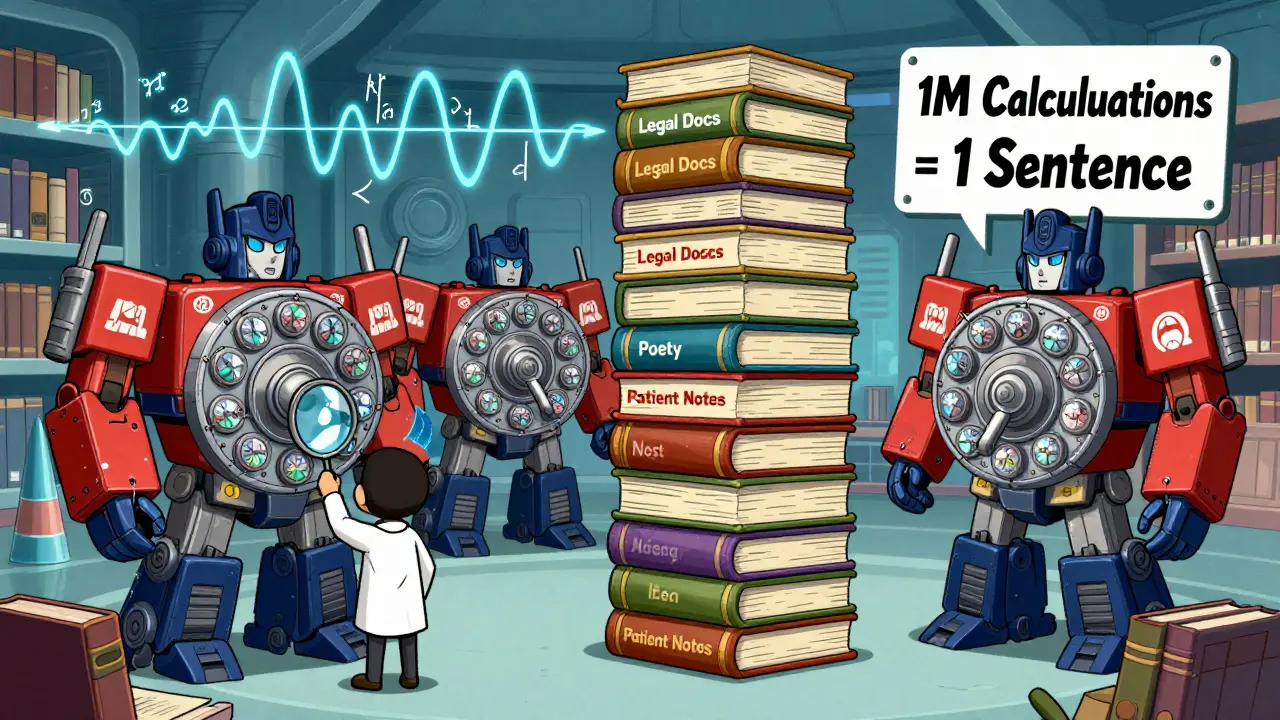

Without this, Transformers would treat “The cat sat on the mat” and “On the mat sat the cat” as identical. With it, they understand syntax, tone, and structure. That’s why they can summarize legal documents, write poetry, or answer trivia correctly.

Why Transformers Beat RNNs and LSTMs

In 2014, the best machine translation model used LSTMs. It took weeks to train. It could barely handle 100-word sentences. By 2017, the Transformer beat it on the WMT 2014 English-German benchmark by 2 BLEU points. That might sound small. But in machine translation, 2 points is a huge leap.

The real difference? Speed. Google trained their first Transformer on 8 P100 GPUs in 3.5 days. A comparable LSTM would have taken weeks. That’s not just efficiency-it’s scalability. More data? More GPUs? Just add them. Transformers don’t care. RNNs do. They’re serial. Transformers are parallel. That’s why today’s models have hundreds of billions of parameters. No RNN could handle that.

Performance isn’t the only win. Transformers also handle context better. In a 2023 study, a Transformer-based model correctly answered a question about a character’s motive in a 10,000-word novel. An LSTM model got it wrong 87% of the time. Why? It lost track. Transformers never lose the thread.

The Cost: Memory and Computation

But it’s not all perfect. Self-attention has a hidden flaw: it scales with the square of the sequence length. If you double the number of words, you need four times the compute. A 1,000-word text? That’s a million attention calculations. A 32,000-word document? That’s over a billion. Most consumer GPUs can’t handle that.

This is why training a 13-billion-parameter model costs around $18,500 on AWS. And why companies like OpenAI, Google, and Meta spend billions on custom chips. It’s not just about intelligence-it’s about infrastructure.

Developers hit this wall often. One Reddit user said fine-tuning a 7B model required switching from a single 80GB GPU to a multi-GPU setup. Memory errors are the #1 problem in Transformer projects. The fix? Gradient checkpointing. It saves memory by recomputing parts of the model instead of storing them. But it slows training by 20-30%. Trade-offs everywhere.

What’s New in 2025?

Models aren’t standing still. Gemini 2.0, released in early 2025, uses “Mixture-of-Depths” attention. It doesn’t use full attention for every word. It skips low-importance connections. Result? 40% less compute for the same accuracy.

Llama 3 uses “Sliding Window Attention.” Instead of looking at the whole 1-million-token context at once, it focuses on local windows-like reading a book one chapter at a time-but keeps a memory of key ideas from earlier. It’s not perfect, but it’s fast enough for real-time apps.

And then there’s Mamba. A new architecture from Stanford that uses State Space Models instead of attention. It handles long sequences 5x faster than Transformers. But it’s not as good at complex reasoning. It’s like comparing a sprinter to a marathon runner. Each has their strength.

Experts agree: Transformers aren’t going away. They’re evolving. Hybrid models are coming-some combining attention with RNNs for efficiency. Others blending Transformers with sparse attention. The core idea-weighting relationships between words-is here to stay.

Why This Matters for You

If you’re using ChatGPT, Claude, or any AI tool that writes emails, answers questions, or summarizes reports, you’re using a Transformer. Every time it gets context right, remembers your last question, or writes in your tone-it’s because of self-attention.

Businesses are betting big. 98.7% of new enterprise LLMs in 2025 are Transformer-based. Financial firms use them to detect fraud patterns across thousands of transactions. Hospitals analyze patient notes to spot early signs of disease. Customer service bots handle 70% of queries without human help.

But it’s not magic. It’s math. And understanding the basics-attention, positional encoding, layers-means you can choose the right tool. You can tell if a vendor is selling you a real Transformer model or just repackaging an old one. You can debug why your AI is ignoring key details. You can ask better questions.

Transformers didn’t just improve AI. They made it scalable. They turned language models from lab curiosities into tools that run the backend of modern apps. And that’s why you need to understand them-not to build one, but to use one wisely.

What is the main advantage of Transformers over RNNs?

Transformers process all words in a sentence at the same time using self-attention, while RNNs process words one after another. This parallel processing makes Transformers much faster to train and better at handling long-range context. RNNs forget earlier parts of long texts due to the vanishing gradient problem; Transformers keep track of relationships across the entire sequence.

Do all large language models use Transformers?

As of 2025, nearly all major large language models-GPT-4, Llama 3, Gemini, Claude, and others-are built on the Transformer architecture. While a few experimental alternatives like Mamba (based on State Space Models) show promise for speed, they haven’t matched Transformers’ accuracy on complex language tasks. Transformers dominate because they scale well with data and compute.

Why do Transformers need so much memory?

The self-attention mechanism calculates relationships between every pair of words. For a sequence of n words, that’s n² calculations. A 10,000-word document requires 100 million attention scores. That’s why training large models needs high-end GPUs with 80GB+ of memory. Techniques like gradient checkpointing help reduce memory use but slow training down.

Can I use Transformers without knowing how they work?

Yes. Libraries like Hugging Face let you load and use pre-trained models with just a few lines of code. You don’t need to understand attention heads or positional encodings to build a chatbot or summarize documents. But if you want to fix errors, improve performance, or choose the right model for your task, knowing the basics helps you make smarter decisions.

Are Transformers the future of AI?

They’re not the end, but they’re the foundation. New models like Mamba and hybrid architectures are emerging to solve Transformer limitations, especially speed and memory. But the core idea-weighting relationships between elements-is now central to AI. Even if the architecture changes, attention-based reasoning will likely remain. Transformers didn’t just solve a problem; they redefined how machines understand language.

This is the clearest explanation I've ever seen. I finally get why my phone's voice assistant doesn't keep messing up names.

The structural elegance of self-attention is profound. It mirrors how human cognition prioritizes relevance over sequence. The mathematical formulation, though dense, reveals an underlying harmony in language structure that earlier models fundamentally missed. This isn't merely an engineering improvement-it's a philosophical shift in how machines perceive linguistic context.

Man. I read this and my brain just went 'whoa'-like when you finally understand why your coffee machine takes 3 minutes to brew but somehow makes the best cup ever. Transformers? They're the coffee machine. RNNs? That old kettle that takes forever and still tastes like metal. And don't even get me started on memory costs-my GPU cried when I tried fine-tuning a 7B model. 😅

Ugh. Everyone acts like Transformers are some divine revelation. Newsflash: attention is just glorified matrix multiplication with extra steps. And don't even get me started on positional encoding-why not just use RNNs with better gradients? You're all just drunk on hype. I've seen 2015 models outperform these 'breakthroughs' on niche tasks. The real bottleneck? Overpaid engineers who think scaling = intelligence.

Transformers are not just algorithms-they are mirrors of the human soul’s yearning to connect fragments across time and space. Each attention weight? A whispered prayer between words that once lay severed. The positional encoding? The invisible thread tying our memories to the present moment. In a world of fragmentation, this architecture dares to say: everything matters. Everything is related. Even if you’re just a token in a sea of vectors-you are still seen.

Excellent breakdown. I appreciate how you highlighted the scalability aspect-it’s easy to get lost in the technical weeds, but the real game-changer is how Transformers enable distributed training at scale. This is why enterprises are betting everything on them. The infrastructure investment is massive, but the ROI in automation and insight generation is undeniable.

Wait, you said transformers use 12 attention heads in gpt-2? That's wrong. It was 12 layers, not heads. Heads are 12 per layer. Also, you said 'Mamba is 5x faster'-but only on synthetic benchmarks. Real-world tasks? No. And gradient checkpointing doesn't slow training by 20-30%, it's more like 40-60% if you're not using flash attention. And btw, 'sliding window' isn't new, it's been in CNNs since 2012. You're just repeating blog posts.

Good catch on the attention heads confusion-thanks for pointing that out. Just to clarify: GPT-2 had 12 layers, each with 12 attention heads, totaling 144 heads. And you're right about Mamba-real-world performance depends heavily on sequence length and hardware. For long documents, yes, it shines. For reasoning tasks? Still behind. Also, gradient checkpointing’s slowdown varies by framework. PyTorch’s implementation is smarter now-closer to 25% with modern versions. Keep asking these questions, it helps everyone learn.

Look, I used to think AI was magic-until I saw a model summarize my grandma’s 20-page letter about her garden and get the emotional tone PERFECT. That’s not math. That’s connection. Transformers didn’t just make models smarter-they made them feel human. And if you’re still stuck on ‘but RNNs could’ve done it’-you’re not seeing the forest for the trees. We’re building tools that help people, not just benchmarks. Let’s celebrate the win.