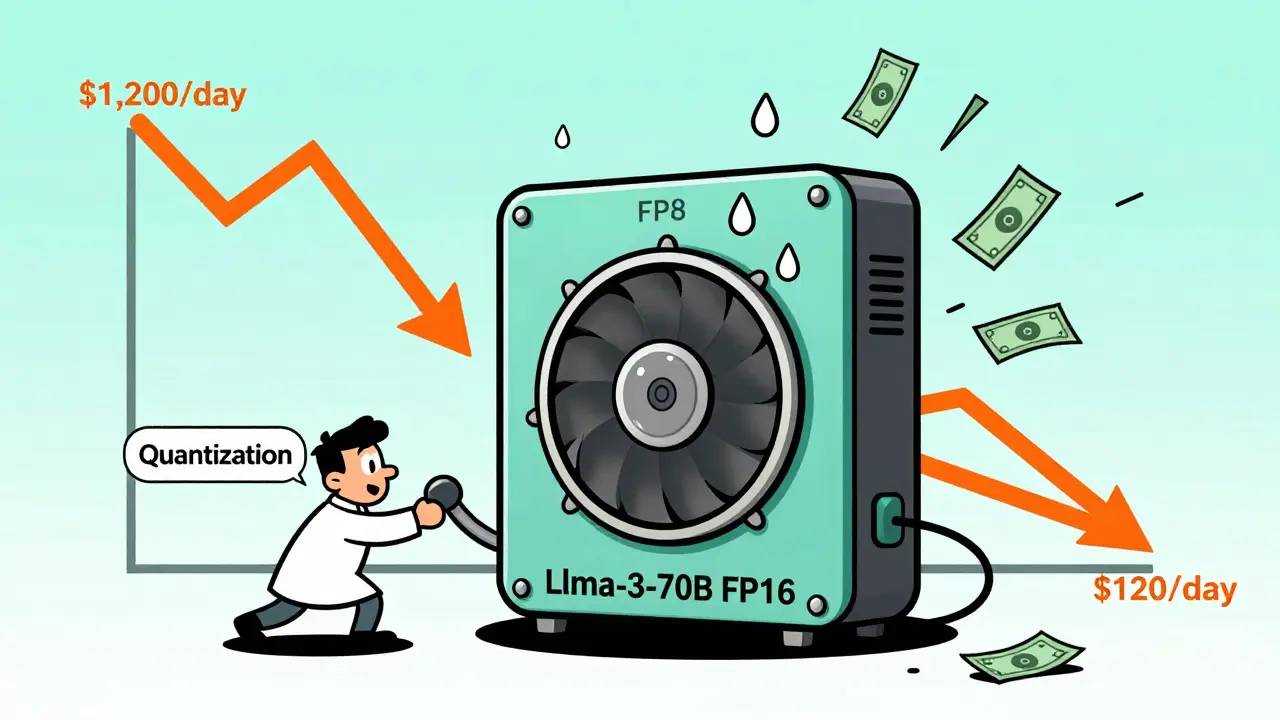

Cost-Performance Tuning for Open-Source LLM Inference: How to Slash Costs Without Losing Quality

Learn how to cut LLM inference costs by 70-90% using open-source tools and techniques like vLLM, quantization, and Multi-LoRA, without sacrificing performance. Real-world strategies for startups and enterprises.