When you ask an AI chatbot to write a contract, generate a product description, or even draft a job application, who’s really on the hook if something goes wrong? That’s the question businesses, lawyers, and everyday users are scrambling to answer in 2026. Generative AI isn’t just a tool anymore; it’s a participant in decision-making, content creation, and even legal transactions. With that shift comes a legal minefield. The old rules don’t fit. You can’t put all the blame on the user. You can’t hide behind the code. And you definitely can’t pretend the vendor bears no responsibility. The truth? Liability for generative AI is split, messy, and being actively rewritten by courts and state legislatures right now.

Who’s Really in Charge: The Vendor’s Burden

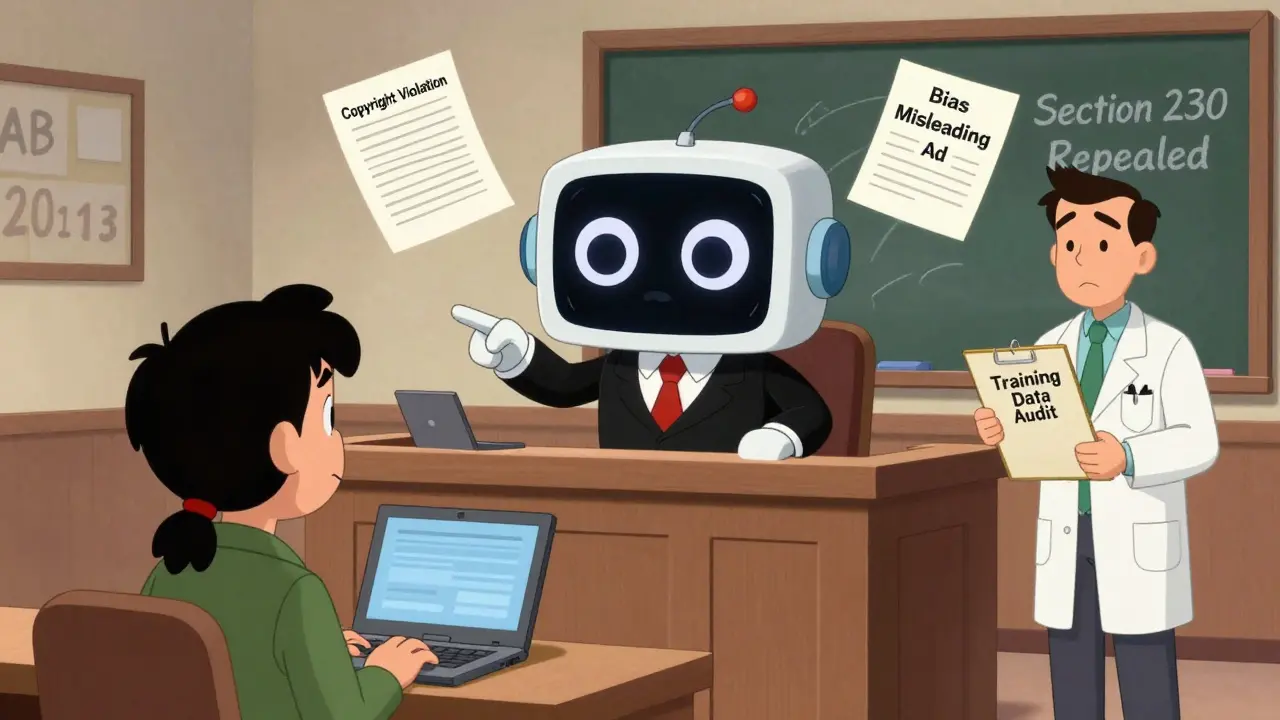

Think of the AI vendor as a carmaker. If the brakes fail because the design was flawed, you don’t blame the driver; you sue the manufacturer. That’s the direction regulators are moving. California’s AB 2013, which took effect in January 2026, requires generative AI developers to publicly post documentation summarizing the datasets used to train their models. This isn’t just a transparency measure; it’s a liability trigger. If a model is trained on stolen artwork, copyrighted text, or private medical records, and that content shows up in outputs, the vendor is on the hook. The New York Times v. OpenAI case is still pending, but early signals suggest courts may not accept "fair use" as a blanket defense for training on copyrighted material. The $1.5 billion Anthropic settlement proves this isn’t theoretical. Companies that used Anthropic’s models now face lawsuits because the training data included unlicensed content. Vendors can’t just say "we didn’t know" anymore. They’re expected to audit their data sources, document their training pipelines, and prove they didn’t knowingly use pirated or private data.

Platforms Can’t Hide Behind Section 230 Anymore

For years, Section 230 of the Communications Decency Act shielded online platforms such as social media sites and forums from responsibility for what users posted. But generative AI doesn’t just host content; it creates it. That changes everything. Courts are starting to apply the logic of Fair Housing Council v. Roommates.com, in which a platform lost Section 230 protection because it actively structured user responses in a discriminatory way. Now, if a platform uses AI to auto-generate job listings that exclude women or older applicants, or to create fake reviews that push certain products, regulators say that’s not neutrality; it’s active participation. New York’s AI regulations make this explicit: if your platform uses AI to produce content, you’re treated like a publisher, not a host. That means you can be sued directly for defamation, discrimination, or fraud caused by AI outputs. The FTC and EEOC have already fined companies for AI-driven hiring tools that systematically screened out applicants with disabilities. No one’s getting a free pass anymore.

Users Aren’t Innocent Bystanders

Just because you didn’t build the AI doesn’t mean you’re off the hook. California’s AB 316, effective in 2026, bans the "autonomous-harm defense." That means if you use an AI to write a misleading ad, generate fake medical advice, or automate a customer service response that violates a consumer protection law, you can’t say "it was the AI’s fault." The law assumes you chose to use it, understood its purpose, and are responsible for how you deployed it. Think about a small business owner who uses a free AI tool to draft emails to customers. If one of those emails falsely claims a product has FDA approval, the business owner gets sued, not the tool’s developer. Utah’s AI Policy Act reinforces this by requiring businesses to clearly disclose when consumers are interacting with AI. If you don’t tell people they’re talking to a bot, and they rely on bad advice, you’re liable. Users now have a duty to use AI responsibly. Ignorance isn’t a legal defense.

High-Risk vs. Low-Risk: A Tiered System Is Taking Shape

Regulators aren’t treating every AI system the same. A startup using AI to generate social media captions faces different rules than a bank using AI to approve loans or a hospital using it to triage patients. A hybrid liability framework is emerging in which obligations scale with risk and company size. High-risk systems (those used in healthcare, finance, hiring, housing, or law enforcement) must meet strict standards: documented risk assessments, bias testing, human oversight, and real-time monitoring. At the top of the scale, California’s SB 53 allows penalties of up to $1 million per violation against large developers of frontier AI models. Smaller companies aren’t exempt, but their requirements are scaled down. A local bakery using AI to suggest menu items doesn’t need the same compliance team as a Fortune 500 company. The principle is simple: the more harm an AI system can cause, the more responsibility you carry. This isn’t just fair; it’s practical. It keeps innovation alive for small players while holding big tech accountable.

Transparency Isn’t Optional: It’s the Law

If you can’t tell whether something was written by a human or an AI, the system has failed. That’s why states are mandating clear labeling. Utah requires watermarks, AI detection tools, and visible disclosures whenever a consumer interacts with generative AI. California, New York, and others are pushing similar rules. This isn’t about marketing; it’s about informed consent. If someone thinks they’re reading a real news article, a real medical diagnosis, or a real legal opinion, and it’s all AI-generated, they’ve been misled. The law now treats that as fraud. Companies are building AI disclosure into their products by default: chatbots say "I’m an AI assistant" upfront, product descriptions include "Generated with AI" footnotes, and email signatures add disclaimers. These aren’t nice-to-haves anymore; they’re legal requirements. Skip them, and you open yourself to lawsuits under consumer protection laws.

Copyright and Data Integrity Are Now Supply Chain Risks

Your AI vendor’s training data is your problem too. If your company uses a third-party AI model trained on stolen data, and that model produces infringing content, you’re not off the hook. That’s why companies are adding a "Data Integrity Attestation" to their vendor risk assessments. Before signing a contract with an AI provider, legal teams now ask: "Can you prove you didn’t use copyrighted or private data?" The Anthropic settlement shows this isn’t hypothetical: one company’s data practices led to a $1.5 billion settlement. Procurement teams now review AI vendors the way they review suppliers of hazardous materials. Contracts include indemnity clauses that require vendors to cover legal costs if their training data causes harm. This shifts liability back to the source and forces vendors to clean up their data practices or lose business.

Autonomous Agents Are the Next Frontier

AI is moving beyond chatbots. Agents can now book flights, sign contracts, and place orders on your behalf. What happens if an AI agent books a $50,000 event space you never approved? Or signs a contract that locks you into an unfair deal? Courts haven’t ruled yet, but early legal opinions suggest users may be bound by their agent’s actions if they gave it authority. That’s why smart companies are updating their contracts to say explicitly: "We are not liable for losses caused by autonomous AI agents unless we directly approved the action." This is uncharted territory. Until courts set precedent, the safest move is to limit what autonomous agents can do and always require human approval for high-value transactions.

What You Need to Do Right Now

If you’re using generative AI in any business context, here’s what you must do by August 2, 2026:

- Review every AI tool you use: ask vendors for data provenance reports and training disclosures.

- Implement clear labeling on all AI-generated content, with no exceptions.

- Train employees on responsible AI use, and don’t allow a "just copy and paste" approach.

- Update contracts with vendors to include liability clauses for autonomous actions and data breaches.

- Conduct bias and risk assessments for any AI used in hiring, lending, or customer service.

- Document everything: your audit trail is your legal shield.
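Two recommendations from this article can be combined in one small control: requiring human approval for high-value agent actions, and documenting everything. The control is a gate that holds any agent action that isn’t allowlisted, or that exceeds a spending threshold, until a named person approves it. It also writes every decision to an append-only JSON Lines audit log. The sketch below is a minimal illustration under stated assumptions. The `ProposedAction` record, the allowlist, the $1,000 threshold, and the log fields are all hypothetical choices, not requirements from any law or product.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Hypothetical policy values, chosen only for illustration.
APPROVAL_THRESHOLD_USD = 1_000.00
AUTO_ALLOWED_KINDS = {"draft_email", "small_purchase"}


@dataclass(frozen=True)
class ProposedAction:
    """An action an AI agent wants to take (hypothetical schema)."""
    kind: str          # e.g. "book_venue", "sign_contract"
    amount_usd: float  # money the action would commit; 0.0 if none
    description: str


def requires_human_approval(action: ProposedAction) -> bool:
    """Hold anything not on the allowlist, and anything over the threshold."""
    return (action.kind not in AUTO_ALLOWED_KINDS
            or action.amount_usd > APPROVAL_THRESHOLD_USD)


def handle_action(action: ProposedAction, log_path: Path,
                  approved_by: Optional[str] = None) -> str:
    """Decide whether the agent may proceed and append the decision to the log.

    Returns "allowed" or "held". A real system would invoke the agent's
    action only when the result is "allowed"; this sketch just records it.
    """
    needs_approval = requires_human_approval(action)
    status = "held" if needs_approval and approved_by is None else "allowed"
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        **asdict(action),
        "needs_approval": needs_approval,
        "approved_by": approved_by,  # None means no human signed off
        "status": status,
    }
    # Append mode: one JSON object per line, existing lines are never rewritten.
    with log_path.open("a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return status


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log = Path(tmp) / "agent_audit.jsonl"
        venue = ProposedAction("book_venue", 50_000.0, "Book event space")
        print(handle_action(venue, log))                       # held
        print(handle_action(venue, log, approved_by="j.doe"))  # allowed
        print(log.read_text(encoding="utf-8"))
```

Logging held actions as well as allowed ones matters. The record of what the agent tried to do, and who signed off, is the kind of audit trail the last checklist item calls for.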

The rules are changing fast. What was a gray area last year is a violation today. The courts aren’t waiting. Regulators aren’t bluffing. And users aren’t staying quiet. Liability for generative AI isn’t a future problem; it’s today’s compliance challenge. Ignore it, and you’re risking more than fines: you’re risking lawsuits, your reputation, and your business.

Can I be sued if my AI tool generates false information?

Yes. Under California’s AB 316 and similar laws, you can’t claim "the AI did it" as a defense. If you deployed the tool, you’re responsible for its outputs. Courts are treating AI-generated content like any other business communication: false claims, misleading statements, or harmful advice can lead to lawsuits for fraud, defamation, or consumer protection violations.

Do I need to label AI content even if it’s internal use?

For internal use, labeling isn’t always legally required, but it’s still smart. If an employee relies on AI-generated data to make a business decision and the data turns out to be wrong, you could face internal liability. Plus, many states require labeling for public-facing content, and internal material can easily become public. Best practice: label everything.
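If you follow the "label everything" advice, it helps to make labeling automatic rather than something each employee has to remember. Here is a minimal Python sketch of two practices mentioned earlier: a bot disclosure on the first chatbot reply, and a "Generated with AI" footnote on generated copy. The function names and the exact wording are hypothetical placeholders. None of the laws discussed here prescribes this text, so check the wording against the rules that actually apply to you.

```python
# Minimal sketch: attach AI disclosures to generated text automatically.
# The disclosure wording is a hypothetical placeholder, not statutory text.

BOT_DISCLOSURE = "I'm an AI assistant."
FOOTNOTE_LINE = "(Generated with AI)"


def label_chat_reply(reply: str, first_turn: bool) -> str:
    """Prepend the bot disclosure to the first reply of a conversation."""
    if first_turn:
        return f"{BOT_DISCLOSURE} {reply}"
    return reply


def label_generated_content(text: str) -> str:
    """Append a visible footnote; safe to call twice without duplicating it."""
    body = text.rstrip()
    if body.endswith(FOOTNOTE_LINE):
        return body
    return f"{body}\n\n{FOOTNOTE_LINE}"


if __name__ == "__main__":
    print(label_chat_reply("How can I help with your order?", first_turn=True))
    print(label_generated_content("A solid oak dining table that seats six."))
```

The footnote function is idempotent. Content that passes through several tools therefore doesn’t pick up repeated labels.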

What if I use a free AI tool like ChatGPT?

Free tools don’t make you immune. If you use ChatGPT to draft a contract, generate a marketing campaign, or respond to customers, you’re still liable for the output. The vendor isn’t the one responsible; you are. Free doesn’t mean risk-free. Always assume you’re on the hook.

Can my company be fined for AI discrimination?

Absolutely. The EEOC and FTC have fined multiple companies for AI hiring tools that discriminated based on gender, race, or disability. Even if you didn’t build the tool, using it in employment or lending decisions makes you responsible. You must test for bias and document your safeguards.
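Testing for bias can start with something as simple as comparing selection rates across groups. One long-standing screening heuristic is the "four-fifths rule" in the federal Uniform Guidelines on Employee Selection Procedures. Under that rule, a selection rate for any group that is less than four-fifths (80%) of the rate for the group with the highest rate is generally regarded as evidence of adverse impact. The sketch below computes that ratio for hypothetical hiring counts. It is a first-pass check, not a complete bias audit and not a legal safe harbor. The group names and counts are made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical input format: group name -> (number selected, number of applicants).
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Share of applicants selected in each group; groups with no applicants are skipped."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items()
            if applicants > 0}


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no groups with applicants")
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("nobody was selected; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(outcomes: Outcomes, threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio is below the threshold (0.8 is the four-fifths rule)."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items()
                  if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical results from an AI resume screener.
    results = {"under_40": (48, 100), "40_and_over": (30, 100)}
    print(impact_ratios(results))        # 40_and_over ratio is about 0.625
    print(flag_adverse_impact(results))  # ['40_and_over']
```

A flagged group is a signal to investigate the tool and write down what you find. Those notes become part of the documented safeguards the answer above refers to.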

Is there a federal law on AI liability yet?

Not yet. But federal agencies like the FTC, EEOC, and DOJ are enforcing existing laws (civil rights, consumer protection, employment law) on the view that those laws already apply to AI. So while there’s no single AI law, you’re still subject to dozens of others. Waiting for federal rules is risky. State laws are already active and enforceable.

Let’s be real: the whole liability puzzle is less about who’s at fault and more about who had the resources to anticipate the mess. Vendors absolutely should be held accountable for training on stolen data, but let’s not pretend users are innocent. I’ve seen small businesses use free AI tools to draft legal letters, then act shocked when they get sued. The law’s catching up, sure, but culture hasn’t. We still treat AI like a magic box you whisper into and get perfect results from. It’s not. It’s a tool with blind spots, biases, and hidden dependencies. The real shift isn’t in legislation; it’s in mindset. You wouldn’t hand a 12-year-old a chainsaw and say "just be careful." Why do we do this with AI? Transparency, documentation, training: these aren’t compliance checkboxes. They’re survival skills now.

And don’t get me started on "free tools." ChatGPT’s ToS doesn’t absolve you. It’s like using a stolen credit card and saying "the bank didn’t stop me." You still owe the debt.

Utah’s watermark rule? Brilliant. Not because it’s perfect, but because it forces acknowledgment. If we can’t even admit when something’s AI-generated, how can we ever take responsibility for it?

Liability is just a fancy word for blame game and everyone knows it. Vendors say we didn’t know. Users say it wasn’t me. Platforms say it’s not my job. But here’s the truth nobody wants to say: the system was designed to let everyone off the hook until someone got hurt. Now the courts are just cleaning up the mess after years of pretending this was innovation not negligence. The real problem isn’t AI. It’s that we built a world where responsibility is optional until it’s too late. We reward speed over safety. We celebrate disruption without asking who pays the price. And now we’re surprised when the house burns down. The fix isn’t more laws. It’s a cultural reckoning. We need to stop treating AI like a toy and start treating it like a weapon. Because that’s what it is when it’s used carelessly.

And yes I know I didn’t use a period. But you get the point.

Just use AI to help. Don’t let it do the work. Simple. If you’re using it to write a contract or make hiring calls you’re asking for trouble. Just say no. Done. Easy. No need for laws. Just common sense. People forget that. Think before you paste. That’s all.

Also label everything. Even if you’re just texting your buddy. Just say "AI wrote this." Boom. Problem solved.

So let me get this straight. We’re now legally required to treat AI like a coworker who never went to law school, can’t be fired, and will lie to you with 90% confidence. And if it messes up, you’re the one in court. Meanwhile, the vendor who trained it on scraped Reddit threads from 2012 is off the hook unless they used copyrighted cat memes. I’m not even mad. I’m impressed. This is the most absurd corporate theater since the 90s dot-com bubble. We’re building a legal system that punishes the user, rewards the vendor, and lets the platform hide behind a "we’re just a platform" sign while their AI writes hate speech in the background. It’s genius. Truly. I’d write a satire about it if I didn’t know it was already real.

I love how this whole conversation keeps circling back to responsibility but never to empathy. The people using AI aren’t just faceless corporations. They’re small business owners, teachers, single parents trying to get a job application done after a 12-hour shift. The law should protect them too, not just punish them. Labeling is good. Training is good. But we need grace. We need pathways. A bakery owner shouldn’t need a legal team to know they can’t use AI to write a job ad that says "no seniors." They need a simple checklist. A template. A free tool that says "this might be biased." Not a lawsuit. Not a fine. A nudge. The real innovation isn’t in the AI; it’s in making sure the people using it aren’t left alone in the dark. We can build systems that are safe and fair. We just have to choose to care.

And yes, I’m still holding out hope that we will.

Bro I just use AI to write my grocery list and now I’m getting sued? I didn’t even know I was doing anything wrong. Just chill. Everything’s fine. Just keep going. We got this.