Generative AI can write emails, draft reports, and even answer customer questions - but left unchecked, it can also make dangerous mistakes. A medical chatbot suggesting the wrong treatment. A marketing tool generating offensive language. A financial report misstating revenue numbers. These aren’t hypotheticals. They’re real incidents that happened in 2023 and 2024. That’s why companies aren’t just deploying AI - they’re building human-in-the-loop systems to catch those errors before they go live.

What Human-in-the-Loop Really Means

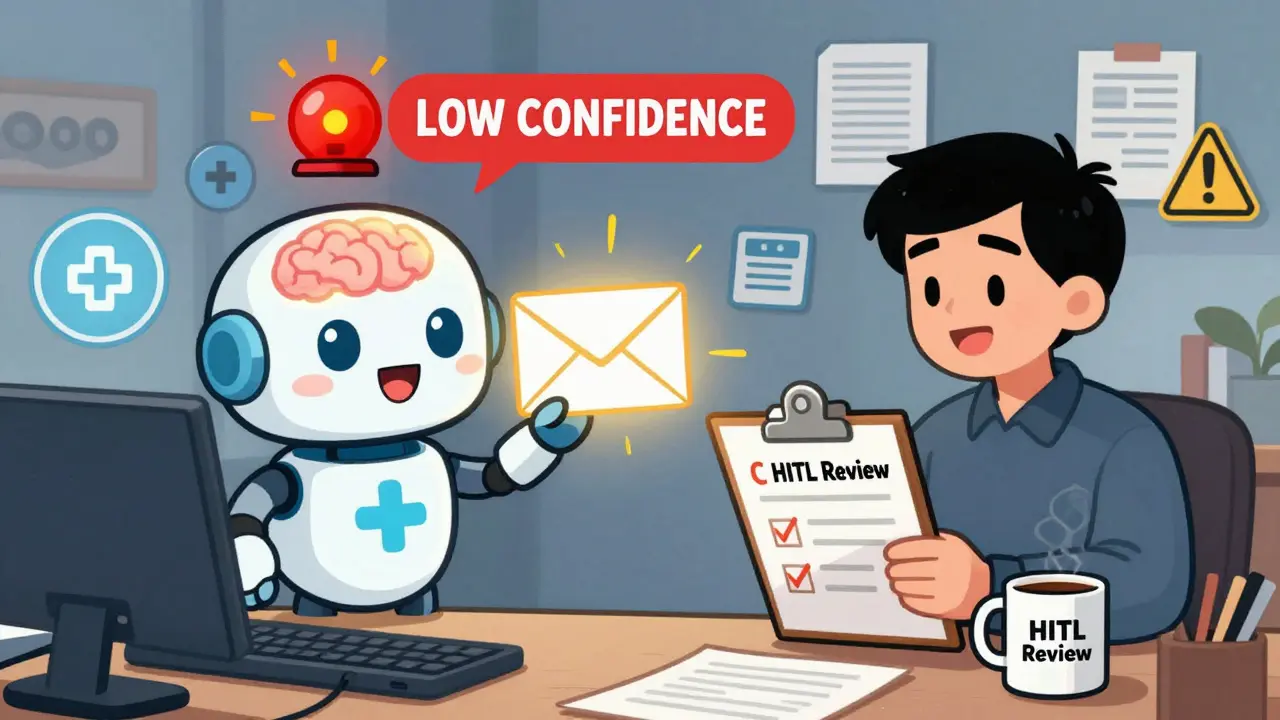

Human-in-the-loop (HITL) isn’t just having someone glance at an AI output. It’s a structured process where humans are part of the workflow - not after the fact, but during it. The AI does the heavy lifting: analyzing data, generating text, making predictions. But when confidence is low, when the stakes are high, or when the content touches regulated areas like healthcare or finance, the system stops and hands the decision to a person. This isn’t optional anymore. According to Forrester’s Q2 2024 report, 78% of enterprises now use some form of HITL for generative AI. In healthcare, that number jumps to 85% because of FDA rules. In finance, it’s 79% thanks to SEC Regulation AI-2023. If your company uses AI to talk to customers, process claims, or generate legal documents, you’re already in the game - whether you realize it or not.The Four-Stage HITL Workflow

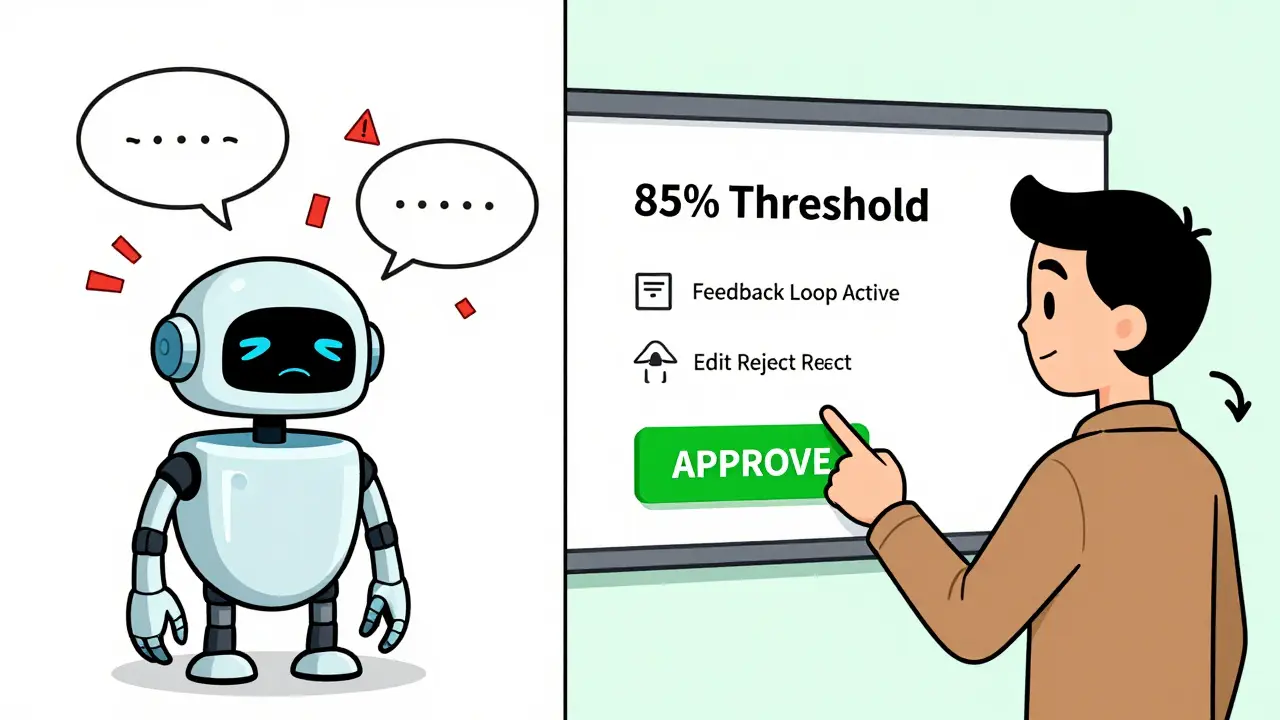

A well-designed HITL system follows a clear sequence. It’s not random. It’s engineered.- AI Processing with Confidence Scoring - The AI generates content and assigns a confidence score. This isn’t guesswork. Systems like AWS Step Functions use historical data to calculate how likely a response is accurate. A score below 85-90% triggers the next step.

- Automated Routing to Reviewers - Low-confidence outputs are automatically sent to human reviewers. High-confidence ones? They go straight to publication. No human needs to waste time on safe outputs.

- Structured Human Review - Reviewers don’t get a blank page. They get a pre-built interface with options: “Approve,” “Reject,” “Edit,” or “Escalate.” Some systems even highlight problematic phrases or suggest fixes based on past corrections.

- Feedback Loop to Retrain AI - Every human decision feeds back into the model. If reviewers consistently reject certain types of responses, the AI learns to avoid them. This is how the system gets smarter over time.

HITL vs. HOTL: The Difference That Matters

Don’t confuse human-in-the-loop with human-on-the-loop. They sound similar, but they’re worlds apart. Human-on-the-loop (HOTL) means humans watch from the sidelines. They step in only if something goes wrong - like a pilot taking over when autopilot fails. But generative AI doesn’t fail like a plane. It drifts. It quietly gets worse. A HOTL system might miss subtle bias, tone issues, or factual inaccuracies because no one is actively checking every output. HITL assumes errors are inevitable. It builds checkpoints. It doesn’t wait for disaster. It prevents it. KPMG’s global head of AI, Tahirkeli, put it bluntly: “Everyone in our firm serves as a human in the loop when it comes to AI.” That means no client-facing AI output leaves the system without review. No exceptions. That’s the standard now.

Where HITL Works Best - and Where It Doesn’t

Not every use case needs the same level of oversight. High-stakes areas that demand HITL:- Healthcare communications (patient instructions, discharge summaries)

- Financial reporting and customer disclosures

- Legal document drafting (contracts, compliance letters)

- Customer service responses in regulated industries

- HR communications (hiring feedback, policy updates)

- Internal draft summaries

- Content brainstorming

- Non-public marketing copy

- Code comments or documentation

Real Results: How Much Does HITL Actually Improve Things?

Numbers don’t lie. Tredence’s 2024 case studies show properly built HITL systems reduce AI errors by 63-78%. That’s huge. But here’s the kicker: they still maintain 40-60% efficiency gains over fully manual processes. You’re not trading speed for safety - you’re getting both. Average review time? 22 to 37 seconds per item. That’s less than a minute. For a company handling 10,000 AI-generated customer replies a week, that’s 6-10 hours of human time - a small price to avoid a PR disaster or regulatory fine. And the feedback loop? It works. KPMG trained their staff on “Trusted AI” standards. After three months, review errors dropped by 31%. The system learned from their corrections. The humans learned from the AI’s suggestions. It became a two-way street.Why Most HITL Projects Fail

It’s not the tech. It’s the people. Professor Fei-Fei Li’s 2024 Stanford study analyzed 127 enterprise HITL implementations. Three failure patterns stood out:- Inconsistent review standards - 68% of teams had no clear guidelines. One reviewer flagged a phrase as toxic. Another approved it. Chaos.

- Inadequate training - 42% of reviewers got no formal training. They were handed a dashboard and told to “use your judgment.”

- Poor feedback integration - 57% of systems collected human corrections but didn’t feed them back into the AI. The model kept making the same mistakes.

What You Need to Build a Working HITL System

You don’t need a team of engineers. But you do need these five things:- A workflow engine - AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows. These handle the routing, notifications, and state management. Don’t build your own. It won’t scale.

- Confidence thresholds - Start at 85%. Test. Adjust. Most companies need 2-3 rounds to get this right.

- A review interface - Simple. Clear. No clutter. Buttons for “Approve,” “Reject,” “Edit,” “Escalate.” Add AI-suggested edits if you can.

- Identity and audit logs - Who reviewed what? When? Why? This isn’t just for compliance - it’s for learning.

- A feedback pipeline - Every human decision must retrain the model. Automate this. Don’t let it become a manual spreadsheet.

The Future: Fading HITL, Not Going Away

Some people think HITL is temporary. That AI will get so good, we won’t need humans anymore. That’s not true. Gartner predicts that by 2026, 90% of enterprise generative AI applications will require formal HITL processes. Why? Because regulations won’t change. Reputational risk won’t disappear. And humans still need to make judgment calls - especially around ethics, tone, and context. The future isn’t zero humans. It’s smarter humans. AWS’s October 2024 update introduced “adaptive confidence scoring.” Instead of a fixed 85% threshold, the system now adjusts based on content type. A product description? Maybe 80% is fine. A clinical note? 95%. That cut unnecessary reviews by 37%. And now there’s “human-in-the-loop reinforcement learning.” Humans don’t just review - they train. When a reviewer corrects an AI output, the system learns not just what to avoid, but why. Early results show review volume dropping 22-29% over six months. The goal isn’t to eliminate humans. It’s to make them focus on what only humans can do: understand nuance, weigh ethics, and make decisions when there’s no clear rule.Final Thought: HITL Isn’t a Feature. It’s a Policy.

You can’t outsource accountability to an algorithm. Not anymore. If your company uses AI to interact with customers, make decisions, or generate official content - you have a responsibility. HITL isn’t a technical add-on. It’s your risk management strategy. It’s your compliance framework. It’s your ethical guardrail. Start with one use case. Train your team. Document your rules. Build the feedback loop. And don’t wait for a mistake to happen before you act. The best AI systems aren’t the ones that work without humans. They’re the ones that know exactly when to call them in.What’s the difference between human-in-the-loop and human-on-the-loop?

Human-in-the-loop (HITL) means humans are actively involved in every decision cycle - reviewing, approving, or editing AI outputs before they’re used. Human-on-the-loop (HOTL) means humans monitor the system and only step in if something goes wrong. HITL prevents errors. HOTL reacts to them. For generative AI, HITL is the safer, more reliable approach.

Do I need special software to implement HITL?

You don’t need custom code, but you do need a workflow orchestration tool. AWS Step Functions, Azure Logic Apps, and Google Cloud Workflows are designed for this. They handle routing, notifications, state tracking, and integration with AI models. Trying to build this from scratch leads to brittle, unmaintainable systems. Use the tools built for the job.

How do I set the right confidence threshold for human review?

Start at 85%. Run a pilot. Track how many errors slip through versus how many reviews are unnecessary. If too many bad outputs get approved, lower the threshold. If reviewers are overwhelmed, raise it. Most companies need 2-3 rounds of testing to find the sweet spot. Don’t guess - measure.

Can AI ever replace humans in the loop entirely?

In low-risk, non-regulated areas - maybe. But for any use case involving customers, compliance, safety, or ethics, humans will always be needed. Gartner predicts 100% of regulated industries will require human review through 2030. The goal isn’t to remove humans - it’s to make them focus on the hardest decisions, not routine checks.

What’s the biggest mistake companies make with HITL?

Failing to standardize review criteria. Without clear rules, reviewers disagree. One person approves what another rejects. That creates legal risk and erodes trust in the system. The fix? Document your standards. Train your team. Create a shared library of approved and rejected examples. Make it easy to follow the rules.

Oh wow another ‘human-in-the-loop’ manifesto. So let me get this straight - we’re paying humans $30/hour to stare at AI-generated customer replies for 22 seconds each? That’s not oversight, that’s glorified proofreading with a side of existential dread. And don’t get me started on ‘feedback loops’ - half these teams don’t even track what got rejected, they just hit approve out of boredom. This isn’t AI governance, it’s a corporate theater production with PowerPoint as the star.

The structural integrity of human-in-the-loop systems is not merely a technical consideration, but a profound epistemological imperative. When we delegate decision-making authority to algorithmic systems - however sophisticated - we abdicate moral responsibility under the illusion of efficiency. The four-stage workflow described herein is not merely optimal; it is ethically non-negotiable. To omit human adjudication in regulated domains is not innovation, but negligence dressed in machine learning jargon. The data from Forrester and KPMG are not statistics - they are moral indictments.

Ugh. So we’re just gonna keep paying people to babysit AI now? Like, come on. If your AI is making mistakes that need a human to fix, why are you even using it? This whole thing feels like a consultant’s dream. $200k for a dashboard with four buttons? Please. I’ve seen interns do better with Google Docs.

People think this is about safety but its really about control. The real reason companies use HITL is so they can say ‘someone approved this’ when the lawsuit comes. No one cares about ethics they care about liability. And the ‘feedback loop’? Most of the time its just a black hole. Reviewers correct stuff but the AI never learns because no one has time to clean the data. Its all theater. I’ve seen it. I’ve lived it. And now they want us to pay for the show again.

Love this breakdown - especially the tiered review model from Parexel. It’s genius. Instead of drowning reviewers in everything, you let junior staff handle the low-risk stuff and save the heavy hitters for the clinical or legal deep dives. And the feedback loop? That’s where the magic happens. I’ve seen teams go from ‘what even is this button’ to spotting AI bias in 5 seconds flat after a 30-minute workshop with real examples. It’s not about more humans - it’s about smarter human design. Also, please stop calling it ‘human-on-the-loop’ - that’s just lazy jargon. HITL is the future, and it’s not going away because we’re scared of work. It’s because we’re finally learning how to work *with* machines, not just at them.

Biggest win here is adaptive confidence scoring. Why check a product description at 95% when it’s fine at 80%? Makes total sense. We did this at my last job with internal docs and cut review time in half. No drama. No panic. Just smarter routing. Also don’t forget the audit logs - they saved our ass during that one compliance audit. Just sayin