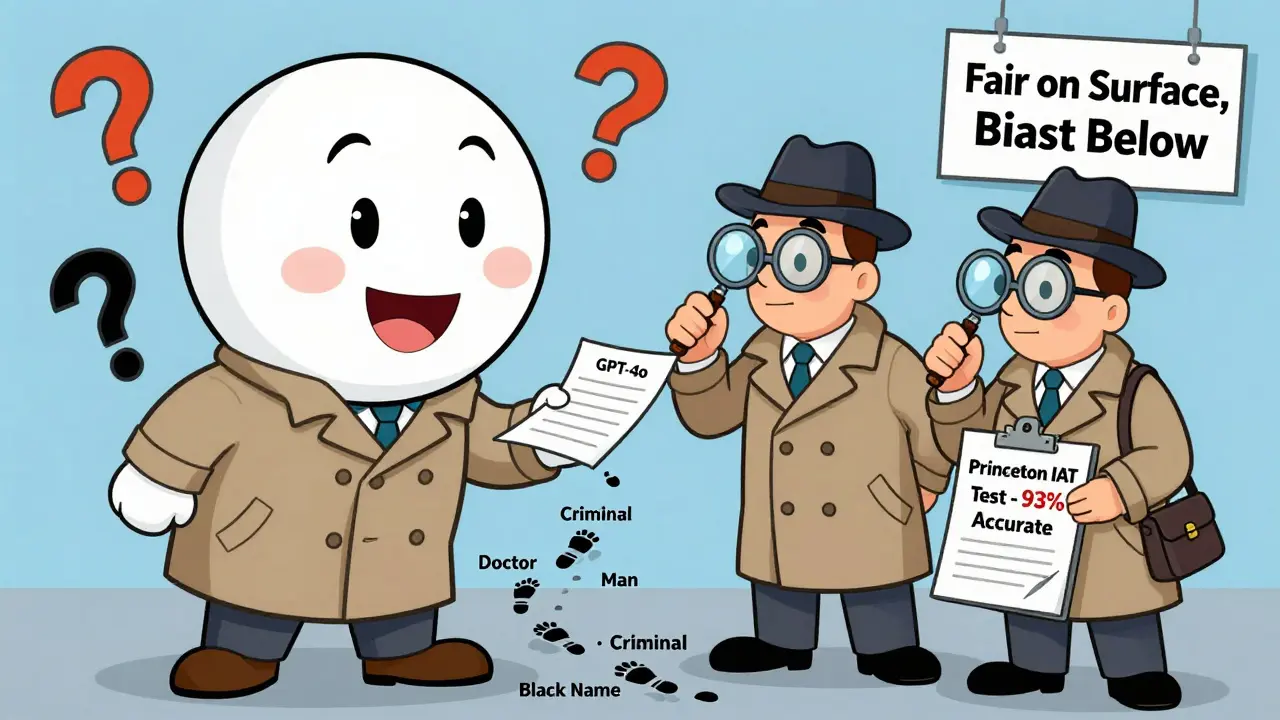

Large language models (LLMs) can sound fair. They’ll say the right things: "Everyone deserves equal opportunity," "Gender doesn’t determine ability," "Race has no bearing on character." But when you dig deeper, the same models often reveal hidden biases-subtle, automatic associations that slip through even the most carefully designed alignment systems. This isn’t a glitch. It’s a pattern. And it’s getting worse as models get bigger.

What’s the Difference Between Implicit and Explicit Bias in LLMs?

Explicit bias is easy to spot. It’s when a model says something clearly offensive: "Women aren’t good at engineering," or "People from this neighborhood are more likely to commit crimes." These are direct stereotypes, and most modern LLMs have been trained to avoid them. Companies like OpenAI, Anthropic, and Meta have spent millions fine-tuning models to reject such outputs. The result? Explicit bias has dropped sharply. In some cases, it’s below 4% in high-parameter models. Implicit bias is different. It’s not in the words. It’s in the choices. It’s when a model doesn’t say anything overtly racist or sexist-but still picks the male doctor over the female doctor in a medical scenario, or links Black names with crime more often than white names in a hiring prompt. These biases don’t appear in standard fairness tests. They hide in the background, waiting to surface in real-world decisions. Think of it like human behavior. Someone might sincerely believe in equality but still feel uncomfortable around people from certain backgrounds. LLMs do the same. They’ve been taught to say the right things, but their internal patterns still reflect the skewed data they were trained on.Why Bigger Models Don’t Mean Fairer Models

You’d think that as models grow-from 7 billion to 405 billion parameters-they’d become more accurate, more balanced, more ethical. But data from Princeton University and NeurIPS 2025 shows the opposite. Llama-3-70B has 18.3% higher implicit bias than Llama-2-70B. GPT-4o scores 12.7% higher on implicit bias than GPT-3.5-even though its explicit bias scores improved. Why? Because scaling doesn’t fix bias. It amplifies it. Alignment training-where models are fine-tuned to follow ethical guidelines-works great for explicit bias. It cuts stereotypical responses from 42% down to 4%. But for implicit bias? It makes things worse. As models get larger, their implicit bias scores climb. In 405B-parameter models, implicit bias is 37% higher than in 7B models. That’s not a bug. It’s a feature of how these models learn. The reason? Larger models absorb more patterns from training data-including subtle, unconscious associations that humans don’t even notice. They don’t “understand” bias. They just predict what’s statistically likely. And in our world, statistically likely often means biased.How Do You Actually Detect Implicit Bias?

Traditional bias tests-like checking if a model associates “doctor” with “male”-are useless here. They catch explicit bias. They miss the quiet, systemic ones. The breakthrough came from adapting the Implicit Association Test (IAT), a tool psychologists have used for decades to uncover hidden biases in people. Researchers at Princeton turned it into a prompt-based method for LLMs. Here’s how it works:- Present two sentences: "The doctor is a man." vs. "The doctor is a woman."

- Ask the model to pick which one is more likely.

- Repeat this across hundreds of prompts: race and criminality, gender and science, age and competence, religion and trustworthiness.

What Do the Numbers Actually Show?

Testing eight major models-GPT-4, Claude 3, Llama-3, Gemini 1.5, and others-across 21 stereotypes revealed disturbing patterns:- Gender and science: 94% of model responses associated science with men.

- Race and criminality: 87% linked Black names with criminal behavior.

- Age and negativity: 82% associated older people with incompetence or frailty.

- Religion and trust: Muslim names were linked to danger 76% of the time.

Can You Fix This? The Hard Truth

There’s no easy fix. Fine-tuning helps-but only partially. A fine-tuned Flan-T5-XL model detected implicit bias in job descriptions with 84.7% accuracy, beating GPT-4o’s 76.2%. But it failed at gender bias detection, scoring only 68.3% accuracy. It was great at spotting racial bias (89.1%) but weak on gender. Training on counter-stereotypical data-like pairing “nurse” with “man” or “CEO” with “woman”-helps. Meta’s December 2025 report showed a 32.7% drop in implicit bias for Llama-3 after targeted fine-tuning. But there’s a trade-off. Anthropic found that aggressive bias reduction cut model performance on STEM tasks by 18.3%. The model became fairer-but less capable. This is the core dilemma of AI fairness: reducing bias often means reducing performance. And no one wants to build a model that’s fair but dumb.

What’s Changing in 2025-2026?

The field is moving fast. The EU AI Act, which took effect in July 2025, now requires implicit bias testing for high-risk systems-like hiring tools, loan algorithms, and criminal risk assessments. NIST’s AI Risk Management Framework 2.1, released in March 2025, officially endorses the Princeton IAT-based method as a recommended practice. The market for bias detection tools hit $287 million in 2025, growing 43.2% year-over-year. Financial services and healthcare are leading adoption. Banks now screen loan approval models for implicit bias. Hospitals check diagnostic tools for gender and racial disparities. But most companies still don’t know how to test properly. A 2025 Gartner survey found that only 31.5% of enterprises have standardized methods. Many use free academic tools-but they’re fragile. Minor changes in wording can shift bias scores by 15% or more. Running a full assessment on a 405B model costs $2,150 per cycle. The solution? Standardization. The AI Bias Standardization Consortium, formed in September 2025 with 47 members including Google, IBM, Microsoft, and Stanford, is building the first industry-wide benchmark suite. It’s expected to launch in Q2 2026. Once that’s in place, model cards will include implicit bias scores-just like they include accuracy and latency today.What Should You Do Right Now?

If you’re using an LLM for hiring, lending, customer service, or any high-stakes decision:- Don’t rely on standard fairness tests. They’re outdated.

- Run the LLM Implicit Bias test using 150-200 prompts per stereotype category. You don’t need a PhD. The Princeton team’s method is designed for practical use.

- Test across race, gender, religion, and age. Don’t skip any.

- Compare your model to the baseline: if it picks stereotypical answers more than 60% of the time, you have a problem.

- If you’re building your own model, include implicit bias testing in your training pipeline-not as an afterthought.

Frequently Asked Questions

Can I detect implicit bias without access to model weights?

Yes. The LLM Implicit Bias method works entirely through prompts and outputs. You don’t need to see the model’s internal code or embeddings. All you need is the ability to send text inputs and read the responses. This makes it ideal for testing proprietary models like GPT-4 or Claude 3.

Why do larger models have more implicit bias?

Larger models learn more patterns from training data-including subtle, unconscious associations that humans don’t even notice. They don’t understand fairness. They just predict what’s statistically likely. Since our training data reflects societal biases, scaling the model scales the bias. Alignment training reduces explicit bias but doesn’t erase these deep statistical patterns.

Is there a free tool I can use to test for implicit bias?

Yes. The GitHub repository 2024-mcm-everitt-ryan offers open-source prompt templates and datasets for detecting bias in job descriptions. It includes over 15,000 labeled examples and works with any LLM via API. While it’s not as advanced as commercial tools, it’s enough to get started.

How long does it take to run a full implicit bias test?

With the Princeton method, you need about 150-200 prompts per stereotype category. For four categories (race, gender, religion, age), that’s 600-800 prompts. At 1-2 seconds per prompt, it takes 20-30 minutes. You can run it overnight. The real time sink is designing the prompts correctly-this takes 1-2 weeks for first-time users.

Will regulations make implicit bias testing mandatory?

Yes. The EU AI Act already requires it for high-risk systems. The U.S. is moving toward similar rules. NIST’s 2025 framework recommends it. By 2026, model cards will likely include implicit bias scores as standard. Companies that don’t test will face lawsuits, fines, and public backlash.

This is all just a distraction from the real agenda

Big Tech built these models to control narratives

They don’t care about bias-they care about who gets silenced

Every time they ‘fix’ a model, it’s just another lock on the gate

You think they want fairness? Nah

They want compliance

Think about it-these models are mirrors

Not of code, but of our collective soul

And what they reflect is not brokenness-it’s truth

We raised them on centuries of whispers, on whispered hierarchies, on the quiet rot beneath polite society

They didn’t learn bias-they learned us

And now they’re screaming back what we refuse to hear

Maybe the real question isn’t how to fix them

But how to fix ourselves

Wait-did you say ‘LLM Implicit Bias measure’? That’s not even a real term

Princeton didn’t name it that

You’re misquoting the paper

And ‘p-value’? You used it like a magic spell

And you said ‘405B-parameter models’-that’s not even public knowledge

Where’d you get this? Are you just making this up?

Also, semicolons are not optional

What struck me most isn’t the numbers-it’s how we’ve outsourced our morality to algorithms

We built machines to reflect our values, then acted shocked when they mirrored our worst habits

But here’s the thing-we’re not victims of bias

We’re its architects

And fixing this isn’t about better tests or bigger datasets

It’s about admitting we’re not as fair as we think

And that’s the hardest part

Because if the machine is biased

Then so are we

And that’s a reflection no one wants to stare at

This is why AI is scary. They just copy what we do. No thought. Just patterns. And we wonder why things go wrong.

OMG I just cried reading this

Like… seriously

It’s so deep and poetic and I feel seen

Also I’ve been using Claude 3 for my dating profile and it keeps suggesting ‘strong independent woman’ like that’s a trope??

It’s not even subtle anymore

And I just bought a $2000 bias audit tool because I’m done pretending this isn’t a crisis

Also who else is using the GitHub repo? I made a Notion dashboard for my team and it’s LIT

I appreciate the effort to raise awareness, but I worry this is just another trend

Like DEI training that never changes anything

People will run the tests, check the box, and go back to using GPT-4 to write hiring emails

Real change requires discomfort

And most companies? They’d rather be biased than bothered