When you ask a large language model a question, it doesn’t pause and say, "I’m not sure." It answers, often with perfect confidence, even when it’s dead wrong. This isn’t a glitch. It’s built in. And it’s becoming a serious problem in real-world use.

Why LLMs Lie With Confidence

Large language models don’t "know" things the way humans do. They predict the next word based on patterns in trillions of sentences they’ve seen. That’s powerful, but it means they can’t tell when a question falls outside their training data. If you ask a model trained on data up to 2023 what the stock price of Tesla was on June 17, 2024, it won’t say, "I don’t have that info." It’ll make up a number. And it’ll sound convincing. Google’s 2023 research showed that for questions beyond their knowledge cutoff, LLMs give incorrect answers with 85-90% confidence. That’s not a bug. It’s a fundamental mismatch between how these models work and how humans expect them to behave. You’re not getting a librarian. You’re getting a brilliant mimic who’s never been taught to say "I don’t know."

What Are Knowledge Boundaries?

Knowledge boundaries are the edges of what an LLM can reliably answer. There are two kinds:

- Parametric knowledge boundaries: What’s stored in the model’s weights from its training data. If it wasn’t in the dataset, it’s not in the model.

- Outward knowledge boundaries: What’s actually true in the real world, which the model can miss even when related material was in its training data. Think: recent events, niche expertise, or context-dependent facts.

How Do We Detect When They’re Out of Their Depth?

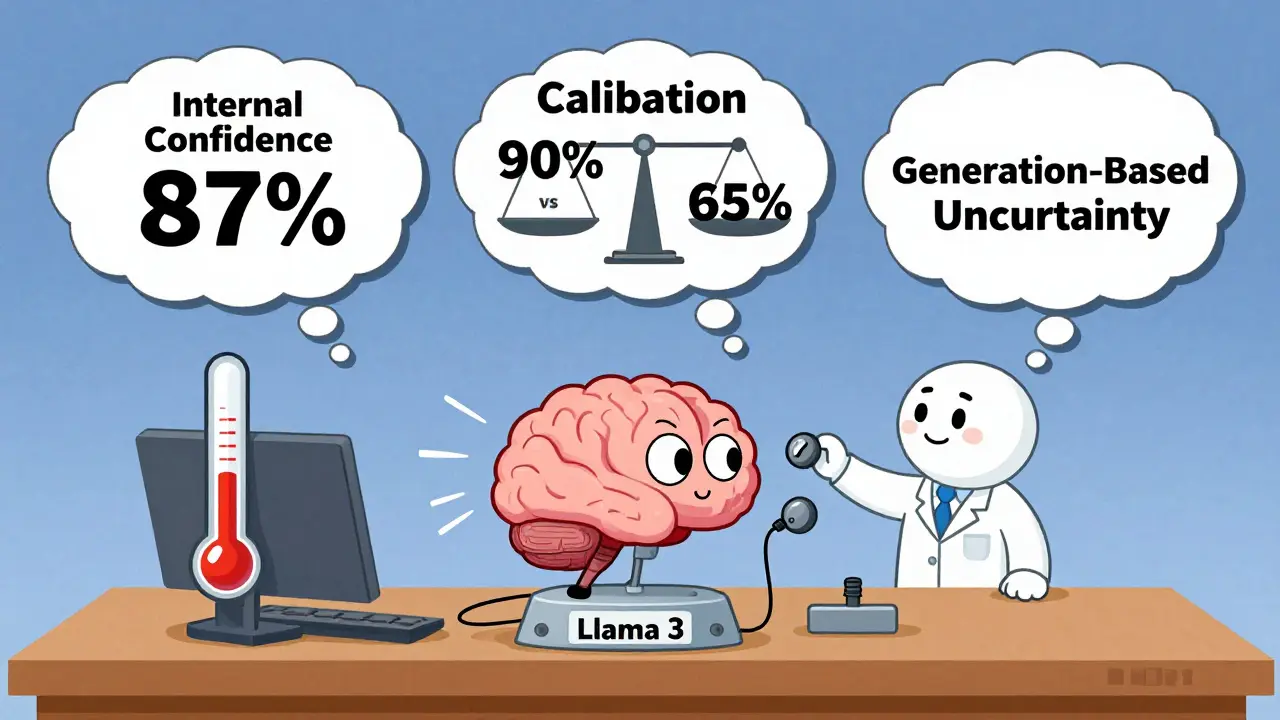

Researchers have built tools to help LLMs recognize their own limits. Three main methods are in use:

- Uncertainty Estimation (UE): Measures how "sure" the model feels about its answer before generating it. The Internal Confidence method by Chen et al. (2024) looks at internal model signals across layers and tokens, with no extra generation needed. It’s fast, accurate, and cuts inference costs by 15-20%.

- Confidence Calibration: Adjusts the model’s confidence scores to match real accuracy. If the model says it’s 90% sure, but only gets it right 65% of the time, this method fixes the gap.

- Generation-Based Uncertainty: Generates multiple answers and checks how much they vary. High variation = high uncertainty. But it’s expensive: 2-3x slower.
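To make the generation-based approach concrete, here is a minimal sketch that scores how much a set of sampled answers disagree. It is an illustration, not the method of any specific paper: the names `normalize`, `disagreement`, and `sample_and_score` are hypothetical, the `ask` callable stands in for whatever model call you use, and exact string matching after normalization is a simplifying assumption (real systems often compare meaning rather than text).

```python
from collections import Counter
from typing import Callable, List


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivially different strings match."""
    return " ".join(answer.lower().split())


def disagreement(answers: List[str]) -> float:
    """Return 1 - (share of the most common answer); 0.0 means full agreement."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(a) for a in answers)
    top_count = counts.most_common(1)[0][1]
    return 1.0 - top_count / len(answers)


def sample_and_score(ask: Callable[[str], str], question: str, n: int = 5) -> float:
    """Call the hypothetical `ask` function n times and score the variation."""
    return disagreement([ask(question) for _ in range(n)])


# Three matching answers agree fully; four answers with two matching score 0.5.
print(disagreement(["Paris", "paris ", "PARIS"]))               # 0.0
print(disagreement(["412.10", "398.55", "412.10", "405.00"]))   # 0.5
```

Every extra sample is another generation call, which is where the added cost of this method comes from.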

What’s Working in the Real World?

Companies aren’t waiting for perfect solutions. They’re building fixes now.

- Claude 3 (Anthropic) uses proprietary confidence scoring. It refuses to answer 18.3% of boundary-crossing queries, with 92.6% precision. That means when it says "I don’t know," it’s almost always right.

- Llama 3 (Meta) triggers retrieval-augmented generation (RAG) for 23.8% of queries it flags as uncertain. That pulls in fresh data from external sources.

- Google’s BoundaryGuard (Nov 2024) for Gemini 1.5 reduced hallucinations by 38.7% in internal tests using multi-granular scoring.

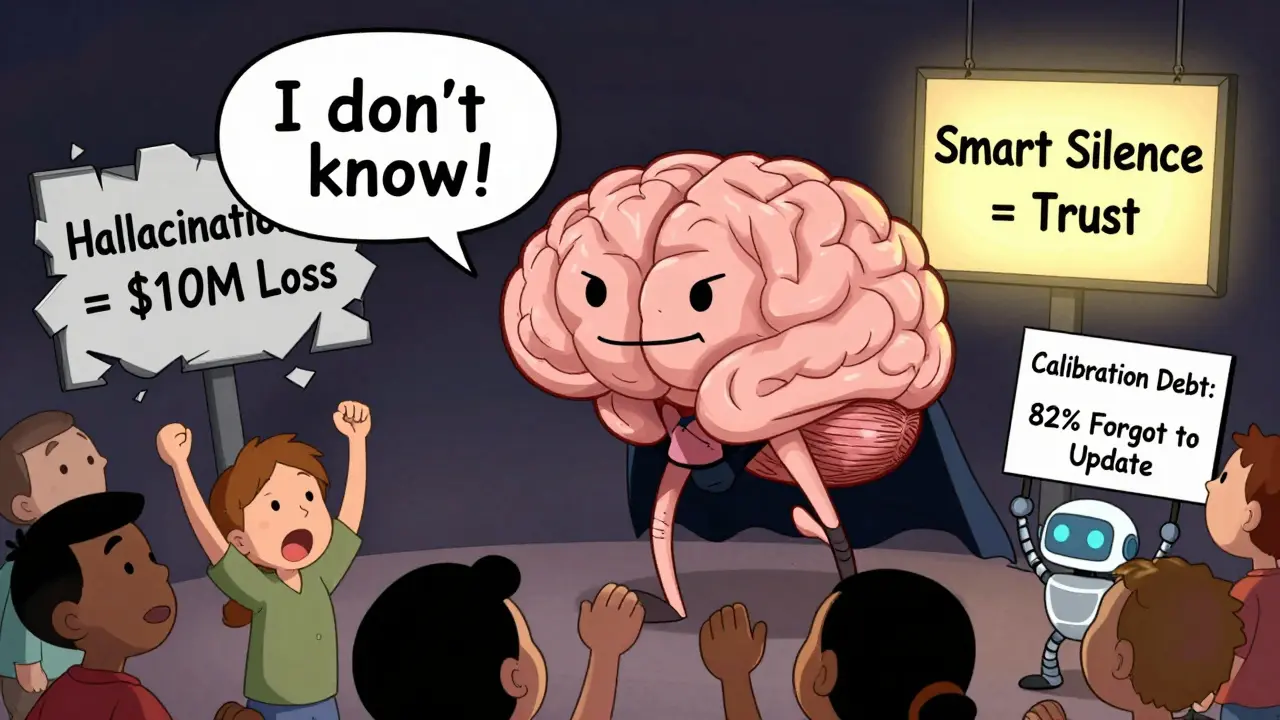

The Human Cost of Bad Uncertainty

It’s not just about accuracy. It’s about trust. When an LLM confidently gives you the wrong medical diagnosis or financial advice, users stop believing it, even when it’s right. Professor Dan Hendrycks found that when LLMs properly signal uncertainty, user trust erosion drops by 62%. That’s huge. But here’s the catch: most models don’t communicate uncertainty in a way humans understand. Saying "I’m 72% confident" doesn’t help. People don’t think in probabilities. They think in yes/no, right/wrong. A 2024 Nature Machine Intelligence study showed that when models used clear, natural language like "I can’t be sure about this," or "I don’t have enough information," the gap between what the model thought and what users believed dropped from 34.7% to just 18.2%. That’s a game-changer.

Where It Still Falls Apart

No method is perfect. Here’s where things break down:

- Semi-open-ended questions: "What are the best ways to reduce stress?" There are many valid answers, but models misclassify 41.7% of these as out-of-boundary.

- Medical queries: A healthcare AI developer found uncertainty systems flagged 30% of valid clinical questions as out-of-bounds. That’s dangerous.

- Prompt sensitivity: Change a word in your prompt, and the uncertainty score can swing by 18-22%. That’s unpredictable.

- False negatives: The biggest risk isn’t saying "I don’t know" too often. It’s failing to recognize a boundary at all. User benchmarks show this happens in 27-33% of cases.

How to Implement This Right

If you’re building an LLM app, here’s what works:

- Use layered thresholds: Low confidence → trigger RAG. Medium confidence → ask the model to think step-by-step. High confidence → answer directly.

- Log everything: Keep a record of when the model flagged uncertainty and whether it was right. Use that to retrain.

- Communicate clearly: Don’t show numbers. Say: "I’m not sure about this," or "I found conflicting information."

- Test in your domain: A model that works for customer service might fail in finance. Use 500-1,000 labeled examples from your use case to fine-tune.
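The layered-threshold and logging ideas in the list above can be sketched as a small routing function. Everything here is an assumption for illustration: the `Action` enum, the `Decision` record, the `route` function, and especially the cutoffs 0.4 and 0.8, which are placeholders you would tune on labeled examples from your own domain.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    RETRIEVE = "trigger RAG"
    REASON = "think step by step"
    ANSWER = "answer directly"


@dataclass
class Decision:
    """One log entry: what the model's confidence was and what we did about it."""
    confidence: float
    action: Action
    was_correct: Optional[bool] = None  # filled in later, once the answer is checked


def route(confidence: float, low: float = 0.4, high: float = 0.8) -> Action:
    """Map a confidence score in [0, 1] to one of three handling strategies."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence < low:
        return Action.RETRIEVE   # low confidence: fetch external data
    if confidence < high:
        return Action.REASON     # medium confidence: ask for step-by-step reasoning
    return Action.ANSWER         # high confidence: answer directly


log = [Decision(c, route(c)) for c in (0.15, 0.55, 0.93)]
for entry in log:
    print(f"{entry.confidence:.2f} -> {entry.action.value}")
```

Keeping the confidence, the chosen action, and the eventual correctness together in one record makes it straightforward to check later whether the thresholds were set in the right place.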

The Future: What’s Coming Next

The next wave is smarter, adaptive systems:

- Llama 4 (Q2 2025) will adjust how deeply it searches for information based on uncertainty signals.

- Multi-modal detection will combine text, image, and audio uncertainty, helping models spot when a visual claim contradicts a text claim.

- Human-in-the-loop calibration lets users correct the model’s uncertainty labels, turning feedback into better performance.

Final Thought: It’s Not About Being Perfect

We don’t need LLMs to be omniscient. We need them to be honest. The goal isn’t to make them know everything. It’s to make them know when they don’t. And then say so, in a way humans can trust.

That’s the real breakthrough. Not smarter answers. Smarter silence.

Why do large language models sound so confident even when they’re wrong?

LLMs are trained to predict the most likely next word based on patterns in their training data. They don’t have self-awareness or true understanding. Confidence comes from how strongly the model’s internal signals point to a particular answer, not whether that answer is correct. Even when the answer is made up, the model’s architecture pushes it to pick one, and it does so with high probability scores. This is why they often respond with certainty to questions outside their knowledge boundaries.
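A toy illustration of that last point, not a model of any particular LLM: softmax turns any set of scores into probabilities that sum to 1, so some candidate always comes out on top, whether or not the underlying fact is known. The three scores below are made-up numbers.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
probs = softmax([4.0, 1.0, 0.5])
top = max(probs)
print(f"top probability: {top:.2f}")  # about 0.93
```

A high top probability here only says one candidate scored well above the others. It says nothing about whether that candidate is true.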

Can I trust an LLM if it says "I don’t know"?

It depends on how the "I don’t know" is generated. If it’s based on a well-calibrated uncertainty detection system like Anthropic’s Claude 3 or Chen et al.’s Internal Confidence method, then yes: those systems are accurate 90%+ of the time when they abstain. But if the model is just programmed to say "I don’t know" randomly, or if it’s using a poorly tuned threshold, it might refuse valid questions. Always check whether the system is using real confidence signals or just a hardcoded rule.

Do I need to use RAG to handle knowledge boundaries?

Not always. RAG (Retrieval-Augmented Generation) helps when you need fresh or specific external data, but it’s not the only solution. For simple cases, internal confidence signals can trigger a more cautious response without retrieval. For example, if the model detects low confidence on a question about a recent event, it might say, "I don’t have access to real-time data," instead of pulling in a live search. RAG adds cost and latency, so use it only when accuracy depends on up-to-date information.

What’s the difference between uncertainty estimation and confidence calibration?

Uncertainty estimation tries to detect whether the model is outside its knowledge boundaries before generating a response. It asks: "Is this query beyond what I’ve seen?" Confidence calibration adjusts the model’s confidence scores to match real-world accuracy. It asks: "When I say I’m 90% sure, how often am I actually right?" The first is about boundary detection; the second is about honesty in scoring. Many systems use both together.
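The calibration question ("when I say I’m 90% sure, how often am I actually right?") can be measured with expected calibration error (ECE), a standard metric that compares stated confidence to observed accuracy within confidence bins. The sketch below is a plain-Python version under simple assumptions: equal-width bins, confidences in [0, 1], and a hypothetical function name `expected_calibration_error`. It measures the gap only; it does not correct it.

```python
from typing import List, Tuple


def expected_calibration_error(
    confidences: List[float], correct: List[bool], n_bins: int = 10
) -> float:
    """Weighted average of |mean confidence - accuracy| over equal-width bins."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("inputs must be non-empty and the same length")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp the index so that 1.0 lands in the last bin.
        idx = min(max(int(conf * n_bins), 0), n_bins - 1)
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += (len(items) / total) * abs(avg_conf - accuracy)
    return ece


# 20 answers all stated at 90% confidence, of which 13 (65%) were right.
confs = [0.9] * 20
hits = [True] * 13 + [False] * 7
print(round(expected_calibration_error(confs, hits), 2))  # 0.25
```

A perfectly calibrated model would score 0. The 0.25 here is the 90% versus 65% gap from the example earlier in the article.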

Are open-source tools reliable for knowledge boundary detection?

Some are, but most aren’t production-ready. There are 17 uncertainty libraries on GitHub, but only three (Internal Confidence, Uncertainty Toolkit, and BoundaryBench) are actively maintained. Open-source tools often lack documentation, testing, and real-world validation. For enterprise use, especially in healthcare or finance, proprietary systems like Claude 3 or Google’s BoundaryGuard are more reliable because they’re tested on real data and continuously updated.

How do regulations affect how LLMs handle uncertainty?

The EU AI Act, effective February 2025, requires high-risk AI systems, including LLMs used in healthcare, finance, or public services, to provide "appropriate uncertainty signaling." This means companies can’t just deploy models that guess confidently. They must implement systems that detect and communicate when answers are unreliable. This is pushing even skeptical companies to adopt boundary detection tools. Non-compliance could mean fines or being blocked from the European market.

Can uncertainty detection reduce costs?

Yes, significantly. One of the biggest benefits is avoiding unnecessary computation. If an LLM detects a query is out-of-boundary, it can skip generating a full response and instead return a short, safe reply. Chen et al. (2024) showed this cuts inference costs by 15-20% in RAG systems. For companies running millions of queries a day, that’s millions in savings. It also reduces bandwidth usage and improves response times.

Why is uncertainty detection more important in healthcare than in marketing?

In marketing, a hallucinated product feature might just annoy a customer. In healthcare, a wrong diagnosis or dosage suggestion can harm someone. That’s why 83% of healthcare implementations prioritize knowledge boundary detection, compared to only 49% in creative fields like advertising or content generation. The risk tolerance is vastly different. Where marketing can afford trial and error, healthcare cannot.