Why Your LLM’s Performance Depends on What You Feed It

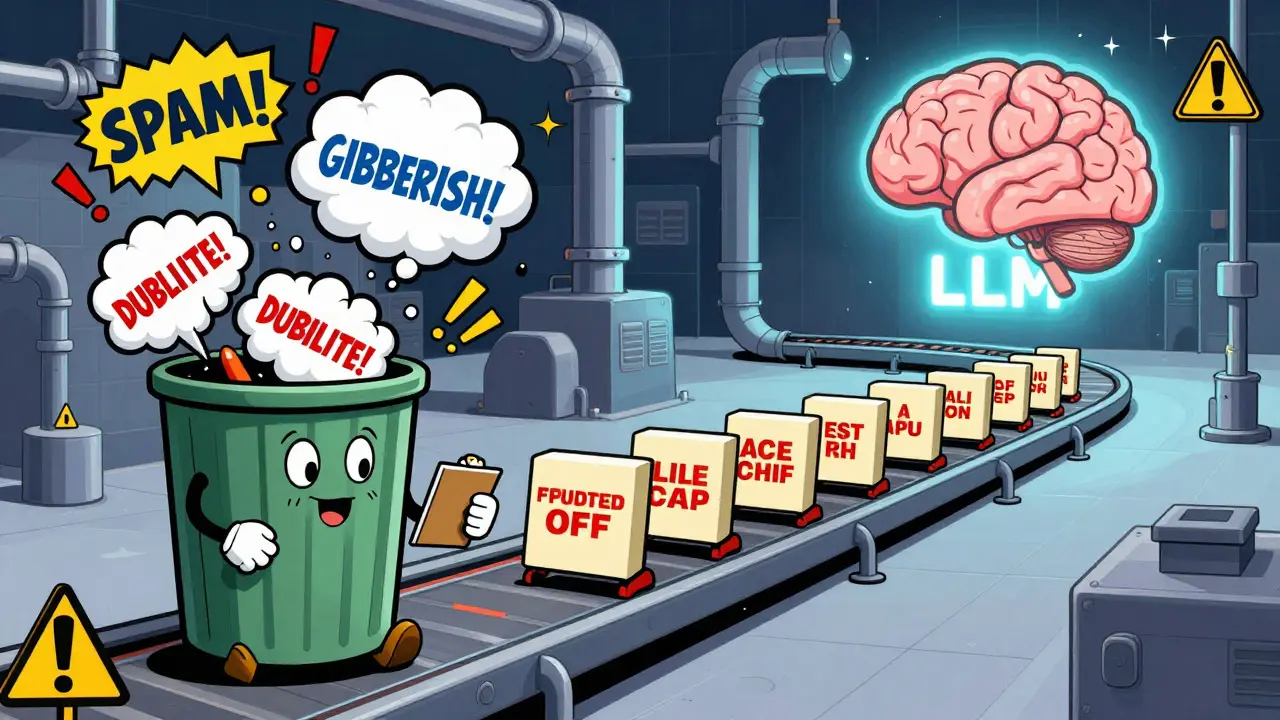

Training a large language model isn’t just about throwing more data at it. It’s about feeding it the right data. A model trained on messy, low-quality text won’t just perform poorly-it might start hallucinating facts, repeating toxic patterns, or failing basic reasoning tasks. Researchers found that even a small amount of bad data-just 15%-can drag down model accuracy by up to 37% on benchmarks like MMLU and GSM8K. That’s not a minor glitch. That’s a broken system.

So how do you stop garbage from slipping into your training pipeline? The answer isn’t one magic tool. It’s a layered defense: heuristic filters and model-based filters working together. Most teams use both, and for good reason. One catches the obvious junk. The other spots the subtle poison.

Heuristic Filters: The First Line of Defense

Think of heuristic filters as the bouncers at the club. They don’t care about the nuance of your conversation-they check your ID and make sure you’re not carrying a weapon. These are simple, fast rules applied to every piece of text before anything else.

- Documents under 50 words or over 5,000 words? Pitch them. Too short means useless snippets. Too long often means scraped, broken HTML or copy-paste chaos.

- Average word length below 3.5 characters? That’s spam or gibberish. Above 6.5? Could be code, symbols, or corrupted text.

- Less than 75% alphabetic characters? Probably a page full of ads, tables, or non-text content.

- Duplicate content? If two documents are 95% identical, keep one. If they’re 98% identical? Delete both. Fuzzy matching catches paraphrased copies too.

- Language mix? If more than 10-15% of a document isn’t your target language, toss it. A mix of English and Mandarin in a dataset meant for English models creates noise, not learning.

These rules remove 18-22% of raw data quickly and cheaply. On a 10TB dataset, that’s 1.8-2.2TB gone before you even turn on your GPU. That’s a huge savings in time and money.

But here’s the catch: heuristics are blunt. A technical manual with 4,200 words and dense code snippets? It’s high quality, but it might get tossed because it’s over 5,000 characters. That’s called overfiltering. And it’s a real problem-8-12% of good content gets lost this way. That’s why you never stop at heuristics.

Model-Based Filters: Smarter, But Costlier

Once the obvious junk is gone, you need something smarter. That’s where model-based filters come in. These are AI models trained to recognize quality-not by rules, but by patterns learned from human-labeled data.

There are three main types:

- n-gram classifiers like fastText: These are lightweight. They look at sequences of words-bigrams, trigrams-and compare them to known good/bad patterns. They’re fast: 1,200 documents per second on a single A100 GPU. But they’re not great at understanding context. Accuracy? Around 80%. They catch the obvious, but miss the sneaky stuff.

- BERT-style classifiers: These understand sentence structure. They’re slower-only 85-120 docs per second-but 28-35% more precise. They can tell if a paragraph is logically coherent or just a random string of words. Used by AWS and others, they’re the sweet spot for mid-sized datasets.

- LLM-as-judge: This is the fancy version. You feed a document to a powerful LLM and ask it: “Is this high-quality training data?” Models like NVIDIA’s Nemotron-4-340B rate text across five dimensions: helpfulness, correctness, coherence, complexity, and verbosity. They match human judgment 92-95% of the time. But they’re expensive. On 8 A100s, you’re processing 15-25 documents per minute. For a trillion-token dataset? Forget it.

Here’s the trade-off: n-gram filters are cheap but shallow. BERT is balanced. LLM-as-judge is precise but slow. Most teams use them in sequence: heuristics first, then n-gram, then BERT for the final cut. LLM-as-judge? Reserved for the top 5% of data you’re absolutely sure you want to keep.

The Cascaded Approach: How Real Teams Do It

There’s no single best method. The best method is stacking them.

According to a 2024 survey of 219 AI teams worldwide, 73% use a cascade:

- Step 1: Heuristics - Remove 18-22% of data. Fast, cheap, no model needed.

- Step 2: n-gram classifier - Remove another 12-15%. Catches patterns the rules missed.

- Step 3: BERT or specialized model - Remove 5-8% more. Final quality pass.

This three-stage pipeline achieves 89-92% overall data quality. Single-method approaches? Heuristic-only gives you 75-78%. LLM-only? You’d spend $20,000 to filter 10TB of data. That’s not a pipeline-it’s a budget disaster.

Take the FineWeb-Edu classifier from Carnegie Mellon. It’s a BERT-style model trained only on educational content. It spots textbook passages, lecture notes, and academic papers with 87% precision. It processes 450GB per hour on an H100. That’s not just filtering-it’s curation.

The Hidden Bias Problem

Here’s something no one talks about enough: your filters are biased.

Models trained on English web data think “good writing” means Western, academic, formal tone. They downgrade content from non-Western sources-blogs from India, forums from Brazil, technical docs in Arabic-by 22-27%. That’s not a glitch. That’s a systemic flaw.

Dr. Emily M. Bender from the University of Washington calls this “the illusion of objectivity.” Just because a filter says a text is low-quality doesn’t mean it’s bad. It might just be different.

And it’s not just language. Filters trained on Reddit and Wikipedia miss the nuance of medical, legal, or financial writing. A doctor’s note might look “incoherent” to a general-purpose model because it uses abbreviations and shorthand. But it’s perfectly valid.

The fix? Train your filters on diverse data. Include non-English, non-Western, and domain-specific examples. And always sample human-reviewed data from the filtered output. If you’re not checking, you’re amplifying bias.

Human-in-the-Loop: The Final Safety Net

Even the best filters make mistakes. That’s why 68% of teams add human review.

They don’t read everything. That’s impossible. Instead, they sample 0.5-1.5% of the filtered data-say, 5,000 documents from a 1 million document set-and send them to human raters. Scale AI charges $3,200-$4,800 per million documents for this. It’s expensive, but it’s worth it.

Why? Because humans catch things machines miss: sarcasm, cultural context, subtle contradictions, or documents that look odd but are actually perfect for training.

Healthcare and finance teams do this even more rigorously. Medical LLMs require 99.2% factual accuracy. That means triple-checking every source. It adds 35-40% to the cost-but cuts regulatory risk by 62%, according to FDA data from early 2025.

What’s Next: The Future of Data Quality

The market for LLM data quality tools is exploding. Gartner predicts it’ll hit $4.8 billion by 2026. Companies like Gable AI focus on healthcare. AWS, Azure, and Google offer their own toolkits. Startups are building plug-and-play solutions for teams that don’t want to build their own.

But the real innovation is coming from the training process itself. NVIDIA and others are working on “quality-aware training”-where the model adjusts how it learns based on the quality score of each training example in real time. Imagine a model that pays more attention to clean, well-written text and ignores the noise as it trains. That’s not science fiction. It’s on the roadmap for 2027.

For now, the rule is simple: filter early, filter often, filter smart. Don’t trust one method. Don’t skip human review. And never assume your data is clean just because it came from the web.

Practical Tips for Your Pipeline

- Start with heuristics. They’re free and fast. Use word count, language ratio, and duplicate detection.

- Use n-gram filters for bulk cleanup. FastText is your friend for datasets over 100GB.

- Reserve BERT-style models for the final quality pass. They’re worth the cost for critical data.

- Never use LLM-as-judge on the full dataset. Save it for your top 1%.

- Sample 1% of filtered data for human review. It’s cheap insurance.

- Retrain your filters every 45-60 days. Web content changes. Your filters must too.

- Track what gets filtered out. If you’re losing too many technical docs, adjust your word count rule.

If you’re training an LLM without a data quality pipeline, you’re not building intelligence. You’re building noise.

Man, I just spent 3 hours scrubbing my dataset with heuristics and I swear half the good stuff got tossed. That 5k-word limit? My entire Python textbook series got nuked. I’m gonna tweak it to 8k now. Who knew coding docs were ‘too long’?

lol i just used a script to delete dupes and now my model is way better. no idea what bert is but it works

This is exactly the kind of practical insight the field needs. Too many teams chase shiny LLM tools without fixing the basics first. Heuristics are boring but they’re the backbone. Keep building, keep iterating.

What’s fascinating is how we’ve built an entire pipeline around the illusion of neutrality. We call it ‘data quality’ but what we’re really doing is enforcing a cultural standard - Western, academic, formal. The documents we discard aren’t low-quality. They’re just different. They speak in rhythms we don’t recognize. We’re not filtering noise. We’re silencing voices. And we call it progress.

That 22-27% drop in non-Western content? That’s not a bug. That’s a feature of our training data’s colonial DNA. We didn’t just miss diversity - we engineered it out.

And yet we act shocked when our models can’t handle slang, dialects, or non-linear logic. We trained them on Wikipedia. We didn’t train them on the world.

Maybe the real innovation isn’t better filters. Maybe it’s admitting we don’t know what ‘quality’ means - and letting the data teach us.

Wow. So we’re paying $20k to filter 10TB with an LLM? That’s not AI, that’s a tax on your budget. Use heuristics, move on. Stop overengineering. Your model doesn’t need to be perfect. It just needs to not say ‘the moon is made of cheese’ 80% of the time.

I love how this post balances technical rigor with ethical awareness. Too often, we treat data cleaning like a mechanical process - but it’s deeply human. Every rule we write reflects a value. Every threshold we set favors one kind of voice over another.

That’s why human review isn’t just a safety net - it’s a moral obligation. Sampling 1% isn’t enough if that 1% doesn’t include voices from the Global South, from non-academic forums, from marginalized communities. We need to ask: Who gets to define ‘quality’? And who pays the price when they’re filtered out?

Let’s not just build smarter models. Let’s build fairer ones.

For anyone just starting out: don’t get overwhelmed. Start with the heuristics - word count, language ratio, duplicates. Those alone will clean up 20% of your garbage for free. Then add FastText. Then sample 1% for humans. That’s it. You don’t need an LLM judge on day one. Save that for your crown jewels.

And if you’re losing technical docs? Raise the word limit. If you’re drowning in spam? Tighten the alpha-char threshold. Your filters should adapt to your data, not the other way around.

It’s worth noting that the cascaded approach isn’t just efficient - it’s scalable. But the real challenge lies in maintaining the integrity of the human-reviewed samples over time. Without consistent annotation guidelines and inter-rater reliability checks, the human-in-the-loop becomes a liability, not a safeguard. We must treat human feedback as a data stream - noisy, variable, but essential. Retrain your raters. Document their decisions. And never assume consistency where none exists