Most large language models, like Llama 2 or GPT-J, work well for general chat, summaries, or writing emails. But ask them to interpret a medical record, decode a legal contract, or summarize an SEC filing, and they stumble. Why? Because these fields don’t speak like everyday language. They use jargon, complex sentence structures, and context that standard models never learned. That’s where domain adaptation comes in. It’s not magic. It’s the process of taking a powerful, general-purpose language model and teaching it to understand the specific way professionals in a niche field actually talk.

Why General Models Fail in Specialized Fields

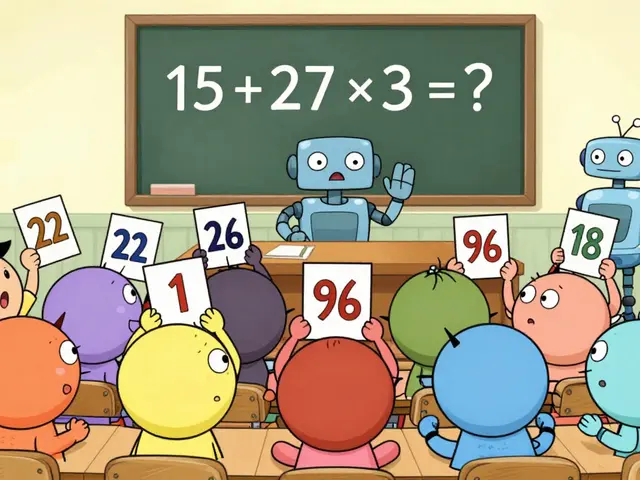

A model trained on billions of web pages learns how people write blog posts, Reddit threads, and Wikipedia articles. But medical notes? They’re terse, abbreviated, and full of acronyms like “HTN” for hypertension or “CVA” (cerebrovascular accident) for stroke. Legal documents? They’re dense, repetitive, and full of Latin phrases and conditional clauses. Financial reports? They’re full of terms like EBITDA, GAAP, and non-GAAP adjustments that mean nothing outside the industry. Studies show general models score between 58% and 72% accuracy on these tasks. That’s worse than a human intern. But after domain adaptation, those same models jump to 83% or higher. In biomedical text, improvements hit 29%, enough to move a model from guessing to reliably identifying drug interactions or disease patterns. This isn’t theoretical. Hospitals, law firms, and hedge funds are already using it.

The Three Main Ways to Adapt a Model

There are three main paths to make a model understand a new domain. Each has trade-offs in data, cost, and performance.

- Domain-Adaptive Pre-Training (DAPT): You take a base model, say Llama 2 7B, and keep training it on thousands of unlabeled documents from your field. Think: 10,000 patient notes, 5,000 court rulings, or 20,000 earnings calls. This teaches the model the rhythm and vocabulary of the domain. It’s the most effective method, boosting accuracy by 7-13% over basic fine-tuning. But it needs serious compute: 5,000-50,000 documents and 1-3 days on 8 A100 GPUs. Not cheap.

- Continued Pretraining (CPT): This is DAPT with a safety net. Instead of training on domain documents alone, you keep some general text in the mix, typically 10-20% of the training data drawn from the original pre-training corpus. This guards against catastrophic forgetting, where the model loses basic abilities, such as answering “What’s the capital of France?”, after learning too much about insurance claims. A 2025 Nature study found 68% of fine-tuned models suffer from this without CPT.

- Supervised Fine-Tuning (SFT): This is the most common approach for teams with labeled data. You feed the model 500-5,000 examples where you’ve already told it the right answer: “This note says the patient has Type 2 diabetes,” or “This clause terminates the contract if payment is late.” SFT doesn’t need as much data or compute, and it’s faster. In healthcare and legal settings, it delivers 22-35% accuracy gains. But it only works if you have clean, expert-labeled examples, and those are often the bottleneck.
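
For CPT, the hands-on step is assembling the training mix. Below is a minimal sketch, assuming the 10-20% figure refers to the share of general-domain text in the final mix (the workflow later suggests 15%) and counting documents rather than tokens for simplicity. `build_training_mix` is a hypothetical helper, not part of any library.

```python
import random


def build_training_mix(domain_docs, general_docs, general_fraction=0.15, seed=0):
    """Keep every domain document and sample enough general documents that
    they make up roughly `general_fraction` of the shuffled result.

    Counting documents instead of tokens is a simplifying assumption.
    """
    if not 0.0 <= general_fraction < 1.0:
        raise ValueError("general_fraction must be in [0, 1)")
    # Solve n_general / (n_domain + n_general) = general_fraction for n_general.
    n_general = round(len(domain_docs) * general_fraction / (1.0 - general_fraction))
    if n_general > len(general_docs):
        raise ValueError("not enough general documents for the requested share")
    rng = random.Random(seed)  # fixed seed so the mix is reproducible
    mix = list(domain_docs) + rng.sample(list(general_docs), n_general)
    rng.shuffle(mix)
    return mix


domain = [f"clinical note {i}" for i in range(85)]
general = [f"web page {i}" for i in range(1000)]
mix = build_training_mix(domain, general, general_fraction=0.15)
print(len(mix), sum(doc.startswith("web page") for doc in mix))  # 100 15
```

In a real pipeline you would balance by token count, since a single court ruling can outweigh dozens of short web pages.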

The New Player: DEAL and Low-Data Adaptation

What if you only have 50 labeled examples? Or you’re adapting from English to a low-resource language like Slovenian? Standard methods fail here. That’s where DEAL (Data Efficient Alignment for Language) comes in. Introduced in September 2024 by researchers David Wu and Sanjiban Choudhury, DEAL doesn’t try to teach the model new words. Instead, it transfers knowledge from similar tasks. For example, if you’ve trained a model to classify medical symptoms in English, DEAL can use that to help classify symptoms in Spanish, even if you only have 80 labeled examples in Spanish. In tests, DEAL improved performance by 18.7% on benchmarks like MT-Bench when labels were scarce. In cross-lingual cases, the improvement reached 24.6%, far ahead of the 8.3% seen with older methods. It’s especially useful for industries where labeling is expensive or slow: think clinical trial reports or international compliance documents.

What Models Work Best?

Not all models are built the same for adaptation. Llama 2 (7B, 13B, 70B) is the most popular open-source choice because its weights are free to download, it’s well-documented, and it’s compatible with tools like Hugging Face. GPT-J 6B is another solid option for smaller teams. For enterprises with budgets, Anthropic’s Claude 3 offers strong domain performance out of the box, especially in legal and regulatory contexts. Platforms like AWS SageMaker JumpStart support 12 foundation models for domain adaptation as of late 2024. Google Vertex AI supports fewer but offers tighter integration with BigQuery for data-heavy workflows. The key is choosing a model that’s already been pre-trained on a broad corpus, so it has a strong foundation, and whose license permits fine-tuning for your use case.

Costs and Commercial Tools

Training a domain-adapted model isn’t free. On AWS, running a fine-tuning job on an ml.g5.12xlarge instance costs $12.80 per hour. Google charges $18.45 for comparable compute, 44% more. That adds up fast. A full DAPT run might take 48 hours; on one ml.g5.12xlarge instance, that’s $614 just in compute. But the real cost isn’t the GPU time; it’s the data. One financial services team reported needing 15,000 labeled SEC filings to get acceptable results, far more than AWS’s claim of 500. Why? Financial language changes every quarter. New regulations, new acronyms. What worked in Q1 2024 might be obsolete by Q3. Commercial tools like AWS SageMaker, NVIDIA NeMo, and Hugging Face offer pipelines that automate parts of the process. But G2 reviews show 52% of negative feedback blames “hidden costs of data preparation.” Cleaning, labeling, and validating domain data takes longer than the actual model training. In healthcare, hiring clinical reviewers to annotate records can cost $50-$100 per hour.

How to Actually Do It: A Practical Workflow

Here’s how teams that succeed do it:

- Collect domain data: Gather at least 500 examples, ideally 5,000+. For legal: court opinions, contracts, briefs. For medical: discharge summaries, radiology reports, EHR notes. Don’t use scraped web pages. Use real internal documents.

- Label if needed: For SFT, you need input-output pairs. “Input: Patient has chest pain, BP 160/90. Output: Suspected acute coronary syndrome.” Use domain experts. Not interns.

- Choose your method: If you have lots of unlabeled data → DAPT. If you have labeled data → SFT. If you have almost no labeled data → try DEAL.

- Use parameter-efficient methods: Most practitioners now use LoRA (Low-Rank Adaptation). Instead of updating all 7 billion parameters in Llama 2, LoRA tweaks only 0.1-1%. It’s faster, cheaper, and reduces overfitting. 81% of Reddit users in NLP threads say they use LoRA.

- Mix in original data: To avoid forgetting, add 15% of the original pre-training data (like Common Crawl) to your domain set. AWS found this cuts catastrophic forgetting by 34.7%.

- Evaluate with real metrics: Don’t just check accuracy. Use domain-specific benchmarks like AdaptEval. Test on tasks your team actually cares about: “Can it extract drug dosages from notes?” “Can it flag contract risks?”
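
The LoRA figure in the workflow follows from simple arithmetic: for a frozen weight of shape d_in x d_out, LoRA trains two low-rank factors that add r × (d_in + d_out) parameters. The sketch below counts them for Llama 2 7B, assuming its published configuration (hidden size 4096, MLP size 11008, 32 layers, 6,738,415,616 parameters); the helper names are illustrative. In practice, Hugging Face’s `peft` library attaches the adapters through `LoraConfig` and `get_peft_model`.

```python
# Assumed Llama 2 7B shapes, taken from the model's public configuration.
HIDDEN = 4096
INTERMEDIATE = 11008
LAYERS = 32
BASE_PARAMS = 6_738_415_616

# (in_features, out_features) of each linear projection in one decoder layer.
PROJECTIONS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, HIDDEN),
    "v_proj": (HIDDEN, HIDDEN),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_trainable_params(rank, targets):
    """LoRA learns the update to a frozen W as B @ A, with A of shape
    (rank x d_in) and B of shape (d_out x rank), so each adapted projection
    adds rank * (d_in + d_out) trainable parameters per layer."""
    per_layer = sum(
        rank * (d_in + d_out)
        for name, (d_in, d_out) in PROJECTIONS.items()
        if name in targets
    )
    return per_layer * LAYERS


all_linear = lora_trainable_params(rank=16, targets=set(PROJECTIONS))
attn_qv = lora_trainable_params(rank=8, targets={"q_proj", "v_proj"})
print(f"r=16, all projections: {all_linear:,} ({all_linear / BASE_PARAMS:.2%})")
print(f"r=8, q/v only: {attn_qv:,} ({attn_qv / BASE_PARAMS:.2%})")
# r=16, all projections: 39,976,960 (0.59%)
# r=8, q/v only: 4,194,304 (0.06%)
```

The share depends on the rank and on which projections you adapt: rank 16 on every projection lands inside the 0.1-1% range, while rank 8 on only the query and value projections stays below it.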

Pitfalls and Hidden Risks

Domain adaptation isn’t risk-free.

- Catastrophic forgetting: The model forgets basic facts. Always test it on general knowledge after adaptation.

- Amplified bias: If your training data has gender or racial biases (e.g., more male doctors in medical notes), the model will learn them. Nature’s 2025 study found preference-based alignment can increase bias by 15-22% in legal models.

- Domain drift: Your data changes. A new regulation, a new coding system, a new slang term. Models trained on 2023 data perform poorly in 2026. You need a refresh cycle.

- Hard transfers fail: Adapting from short text (tweets) to long text (patents) gives only 4.2% improvement. The model can’t handle the structure. Stick to similar task types.
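
One cheap early-warning signal for domain drift is how much incoming text the training data never contained. A minimal sketch, assuming a crude regex tokenizer in place of the model’s real tokenizer; the helper names are hypothetical, and any alert threshold should be calibrated against your own history rather than taken from here.

```python
import re
from collections import Counter

# Crude lowercase word tokenizer (an assumption); swap in your model's
# tokenizer or a curated domain term list in practice.
TOKEN = re.compile(r"[a-z0-9][a-z0-9-]*")


def tokenize(text):
    return TOKEN.findall(text.lower())


def unseen_token_rate(training_docs, new_docs, top_n=5):
    """Return (share of new tokens absent from the training vocabulary,
    most frequent unseen terms), a rough proxy for new jargon."""
    vocab = {tok for doc in training_docs for tok in tokenize(doc)}
    new_tokens = [tok for doc in new_docs for tok in tokenize(doc)]
    if not new_tokens:
        return 0.0, []
    unseen = Counter(tok for tok in new_tokens if tok not in vocab)
    return sum(unseen.values()) / len(new_tokens), unseen.most_common(top_n)


training = ["Patient has HTN and a prior CVA.", "EBITDA rose this quarter."]
incoming = ["Patient has HTN and started GLP-1 therapy."]
rate, new_terms = unseen_token_rate(training, incoming)
print(f"{rate:.0%}", new_terms)
```

Tracking this rate over each month’s documents turns a “refresh cycle” from a calendar guess into a measurable trigger, and the top unseen terms point labelers at what changed.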

Who’s Using This Right Now?

Sixty-seven percent of Fortune 500 companies have deployed domain-adapted LLMs in at least one function, according to IDC. Healthcare leads: 42% of enterprises use it for clinical documentation, prior authorization, and patient risk scoring. Financial services (38%) use it for SEC filing analysis, fraud detection, and compliance reporting. Legal tech (29%) uses it for contract review, case prediction, and discovery. John Snow Labs, a startup focused on healthcare NLP, captured 9% of the medical adaptation market by specializing in FDA-compliant model training. Their tools help hospitals meet HIPAA and GDPR requirements while improving diagnostic accuracy.

The Future: Automation and Regulation

By 2027, Gartner predicts 65% of enterprise LLM deployments will include automatic domain adaptation. AWS already launched automated pipelines in December 2024 that reduce setup from weeks to hours: you upload your data, pick your domain, and it trains a model overnight. But regulation is catching up. The EU AI Act, whose first obligations began applying in February 2025, sets data-governance and record-keeping requirements for high-risk systems. In practice, that means logging every document used, every label applied, and every model version deployed. PwC says this adds 18-25% to compliance costs. Venture capital is pouring in: $4.1 billion invested in domain-specific AI from January to November 2024, according to PitchBook. McKinsey estimates 83% of business value from LLMs will come from specialized applications, not general chatbots.

What You Need to Get Started

If you’re thinking about domain adaptation:

- You need domain expertise. A data scientist alone won’t cut it. You need a doctor, lawyer, or financial analyst to help label data and validate outputs.

- You need clean data. Garbage in, garbage out. If your medical notes are handwritten scans with poor OCR, no amount of fine-tuning will fix it.

- You need a plan for updates. Domain language evolves. Your model isn’t a one-time fix; it’s a living system.

- You need to test rigorously. Use AdaptEval or build your own benchmark. Don’t trust accuracy numbers from the vendor.
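
Building your own benchmark can start small: a few suites of (prompt, expected answer) pairs for the tasks you care about, plus a general-knowledge suite to catch catastrophic forgetting. The sketch below uses exact-match scoring and treats models as plain Python callables. The 2-point tolerance, the suite names, and the toy “models” are hypothetical placeholders; this is not AdaptEval’s API.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (prompt, expected answer)
Model = Callable[[str], str]


def exact_match_rate(model: Model, examples: List[Example]) -> float:
    """Share of examples whose normalized output equals the expected answer."""
    if not examples:
        raise ValueError("empty evaluation suite")
    hits = sum(model(p).strip().lower() == a.strip().lower() for p, a in examples)
    return hits / len(examples)


def compare(baseline: Model, adapted: Model, suites: Dict[str, List[Example]],
            max_general_drop: float = 0.02):
    """Score both models on every suite. The suite named "general" is the
    forgetting check: flag it if the adapted model falls more than
    max_general_drop (a hypothetical tolerance) below the baseline."""
    report = {name: (exact_match_rate(baseline, ex), exact_match_rate(adapted, ex))
              for name, ex in suites.items()}
    before, after = report["general"]
    return report, (before - after) > max_general_drop


# Toy stand-ins for real model calls (hypothetical).
BASELINE_ANSWERS = {"Capital of France?": "Paris", "2 + 2 =": "4"}
ADAPTED_ANSWERS = {
    "Extract dose: metformin 500 mg bid": "500 mg",
    "Extract dose: lisinopril 10 mg daily": "10 mg",
    "Capital of France?": "Paris",
}


def baseline(prompt: str) -> str:
    return BASELINE_ANSWERS.get(prompt, "")


def adapted(prompt: str) -> str:
    return ADAPTED_ANSWERS.get(prompt, "")


suites = {
    "dose_extraction": [("Extract dose: metformin 500 mg bid", "500 mg"),
                        ("Extract dose: lisinopril 10 mg daily", "10 mg")],
    "general": [("Capital of France?", "Paris"), ("2 + 2 =", "4")],
}
report, forgetting = compare(baseline, adapted, suites)
print(report, forgetting)
# {'dose_extraction': (0.0, 1.0), 'general': (1.0, 0.5)} True
```

Exact match suits extraction tasks like dosages; free-text outputs such as contract-risk summaries need a softer metric or expert review.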

Domain adaptation isn’t about making models smarter. It’s about making them relevant. The best model in the world is useless if it doesn’t understand the language of your business. The ones that win won’t be the biggest; they’ll be the ones that learned to speak your industry’s dialect.

What’s the minimum amount of data needed for domain adaptation?

You can start with as few as 500 labeled examples for supervised fine-tuning (SFT), especially if you use techniques like LoRA or DEAL. But for better, stable results, aim for 2,000-5,000 examples. For domain-adaptive pre-training (DAPT), you need 5,000-50,000 unlabeled documents. The key isn’t just quantity; it’s quality and representativeness. If your 500 examples only cover one type of contract or one patient condition, your model will fail on anything outside that narrow scope.
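
These rules of thumb, together with the “choose your method” step in the workflow, fit in a small helper. It is only a sketch: the thresholds are this article’s figures rather than hard limits, `suggest_methods` is a hypothetical name, and routing any labeled set below 500 examples to DEAL is an assumption.

```python
def suggest_methods(n_labeled: int, n_unlabeled: int) -> list:
    """Map dataset sizes to the article's rules of thumb."""
    suggestions = []
    if n_unlabeled >= 5_000:
        suggestions.append("DAPT")  # 5,000-50,000 unlabeled domain documents
    if n_labeled >= 500:
        suggestions.append("SFT")  # 500+ labeled pairs; 2,000-5,000 for stability
    elif n_labeled > 0:
        suggestions.append("DEAL")  # too few labels for SFT alone
    return suggestions or ["collect more domain data"]


print(suggest_methods(n_labeled=80, n_unlabeled=0))  # ['DEAL']
print(suggest_methods(n_labeled=2_000, n_unlabeled=20_000))  # ['DAPT', 'SFT']
```

Returning both DAPT and SFT when both conditions hold reflects a common pattern (adapt on unlabeled text first, then fine-tune on labels), though the article presents the methods separately.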

Can I use GPT-4 or Claude 3 for domain adaptation?

You can’t download or directly modify the weights of GPT-4 or Claude 3. These are closed models; any customization their providers offer runs as a managed service, so you don’t control the training process or get the resulting weights. You can use prompt engineering or in-context learning to get some domain adaptation, but accuracy will be lower: around 62% on specialized tasks versus 83%+ with fine-tuned open models like Llama 2. If you need true domain adaptation, use open models like Llama 2, Mistral, or GPT-J that let you retrain them.

How long does domain adaptation take?

It depends. Data preparation takes the longest-often 3-6 weeks. Cleaning, labeling, and validating documents is manual work. Once data is ready, training a supervised fine-tuned model with LoRA on a single A100 GPU can take 6-12 hours. Domain-adaptive pre-training with 50,000 documents might take 1-3 days on 8 GPUs. Most teams report a full end-to-end process taking 4-8 weeks, even for experienced teams.
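
Turning durations like these into a compute budget is simple multiplication: wall-clock hours × hourly rate × number of instances. A minimal sketch, using the on-demand rates quoted in the costs section ($12.80/hour for an AWS ml.g5.12xlarge, $18.45 for comparable Google compute) purely as example inputs; `compute_cost` is a hypothetical helper, and you should substitute your own instance pricing.

```python
def compute_cost(hours: float, hourly_rate: float, instances: int = 1) -> float:
    """On-demand compute cost: wall-clock hours x hourly rate x instance count."""
    return hours * hourly_rate * instances


# Example rates from the costs section; real prices vary by region and date.
AWS_G5_12XLARGE = 12.80
GOOGLE_COMPARABLE = 18.45

aws = compute_cost(48, AWS_G5_12XLARGE)
gcp = compute_cost(48, GOOGLE_COMPARABLE)
print(f"48 h on AWS: ${aws:,.2f}; on Google: ${gcp:,.2f} ({gcp / aws - 1:.0%} more)")
# 48 h on AWS: $614.40; on Google: $885.60 (44% more)
```

Compute is usually the smaller line item: at $50-$100 per hour for clinical reviewers, a few weeks of annotation can exceed it many times over.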

Is domain adaptation worth the cost?

For most enterprises, yes. The average cost of implementation is $387,000 per domain, according to Forrester. But the payoff is huge: legal firms using adapted models cut contract review time by 70%, healthcare systems improved diagnostic accuracy by 29%, and financial institutions reduced compliance errors by 41%. If your business relies on processing specialized text daily, the ROI kicks in within 6-12 months. For companies that don’t need it, like those just using chatbots for customer service, it’s overkill.

What’s the difference between domain adaptation and prompt engineering?

Prompt engineering is like giving someone a cheat sheet before they take a test. Domain adaptation is teaching them the subject so they don’t need the cheat sheet. Prompts can help with simple tasks-like asking “Summarize this medical note in plain English”-but they fail with complex, structured, or ambiguous inputs. A 2024 AdaptEval study showed fine-tuned models scored 83.4% on domain tasks; prompt-only models scored 62.4%. For high-stakes applications like diagnosis or legal risk assessment, prompts aren’t reliable enough.

Do I need a PhD to do domain adaptation?

No. You need someone who understands LLMs (like a data scientist), someone who understands your domain (like a doctor or lawyer), and access to tools like Hugging Face or AWS SageMaker. Many teams now use automated pipelines that handle the heavy lifting. The real barrier isn’t technical skill; it’s data quality and access to domain experts. If you can gather good examples and validate outputs, you can succeed without a PhD.

okay but why are we still using llama 2 like it’s 2023? mistral 7b is faster, cheaper, and actually understands context better. also why are people still manually labeling data when you can use weak supervision with snorkel? this is basic stuff

real talk the biggest bottleneck is always the data not the model. i’ve seen teams spend 6 months on fine tuning only to realize their EHR data was 70% OCR garbage

oh wow another tech bro pretending domain adaptation is some revolutionary breakthrough. let’s just admit it: big tech is selling snake oil to hospitals and law firms so they can charge $500k for a pipeline that just replaces a paralegal who makes $60k a year. and don’t even get me started on the bias. you think your ‘fine-tuned’ model won’t start saying ‘female patients are more likely to be hysterical’ because your training data had more male doctors? please

this is all a distraction. china and russia are building their own ai models with state data. we’re wasting money on fine tuning llama while they’re training on real government documents. this whole thing is a west only bubble

thank you for writing this so clearly. i work in healthcare compliance and we just launched our first domain-adapted model using LoRA on Llama 2. it took us 6 weeks to clean the data but now it’s reducing our audit prep time by 40%. the key was having our RNs validate outputs, not just the data team. also please everyone use AdaptEval. i’ve seen too many teams trust vendor accuracy scores and then get burned

i keep wondering if we’re overengineering this. what if the real answer is just… better prompts + human oversight? the cost of training and maintaining these models feels insane compared to just hiring a couple of domain experts to review outputs. is the 20% accuracy gain worth the compliance nightmare? also… do we even know what ‘catastrophic forgetting’ really means anymore? it sounds like a sci-fi term

hi! i just wanted to say this post made me feel way less overwhelmed. i’m a data analyst who just got assigned to our legal team’s LLM project and i had zero idea where to start. the workflow you laid out? gold. i’m using DEAL with 80 labeled contracts and it’s already outperforming our old prompt-based system. also, yes to LoRA. we’re training on a single A100 and it’s only $200 in AWS credits. big win. if anyone needs help setting up the pipeline, dm me. happy to share our template

you missed one huge thing: model versioning. we had a model that worked great in january. then q2 regulations dropped and boom, accuracy fell 18%. we didn’t track the model hash or the data version. now we’re doing git for models. every change gets a commit. every dataset gets a checksum. it’s not sexy but it saved our bacon. also, yes, domain drift is real. update your data every 3 months or your model becomes a fossil

if you’re not using NVIDIA NeMo on a DGX system you’re doing it wrong. everything else is just open source fluff. we’re talking about national security applications here-why would you risk it on some github repo? america leads because we use the right tools. period.